Mirror of https://github.com/danny-avila/LibreChat.git, synced 2025-12-24 04:10:15 +01:00

🍞 fix: Minor fixes and improved Bun support (#1916)

* fix(bun): fix bun compatibility to allow gzip header: https://github.com/oven-sh/bun/issues/267#issuecomment-1854460357
* chore: update custom config examples
* fix(OpenAIClient.chatCompletion): remove redundant call of stream.controller.abort() as `break` aborts the request and prevents abort errors when not called redundantly
* chore: bump bun.lockb
* fix: remove result-thinking class when message is no longer streaming
* fix(bun): improve Bun support by forcing use of old method in bun env, also update old methods with new customizable params
* fix(ci): pass tests

parent 5d887492ea

commit c37d5568bf

9 changed files with 175 additions and 59 deletions

@@ -35,7 +35,6 @@ Some of the endpoints are marked as **Known,** which means they might have speci
]
fetch: false
titleConvo: true
titleMethod: "completion"
titleModel: "mixtral-8x7b-32768"
modelDisplayLabel: "groq"
iconURL: "https://raw.githubusercontent.com/fuegovic/lc-config-yaml/main/icons/groq.png"

@@ -64,7 +63,6 @@ Some of the endpoints are marked as **Known,** which means they might have speci
default: ["mistral-tiny", "mistral-small", "mistral-medium", "mistral-large-latest"]
fetch: true
titleConvo: true
titleMethod: "completion"
titleModel: "mistral-tiny"
modelDisplayLabel: "Mistral"
# Drop Default params parameters from the request. See default params in guide linked below.

@ -81,7 +79,7 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

|||

|

||||

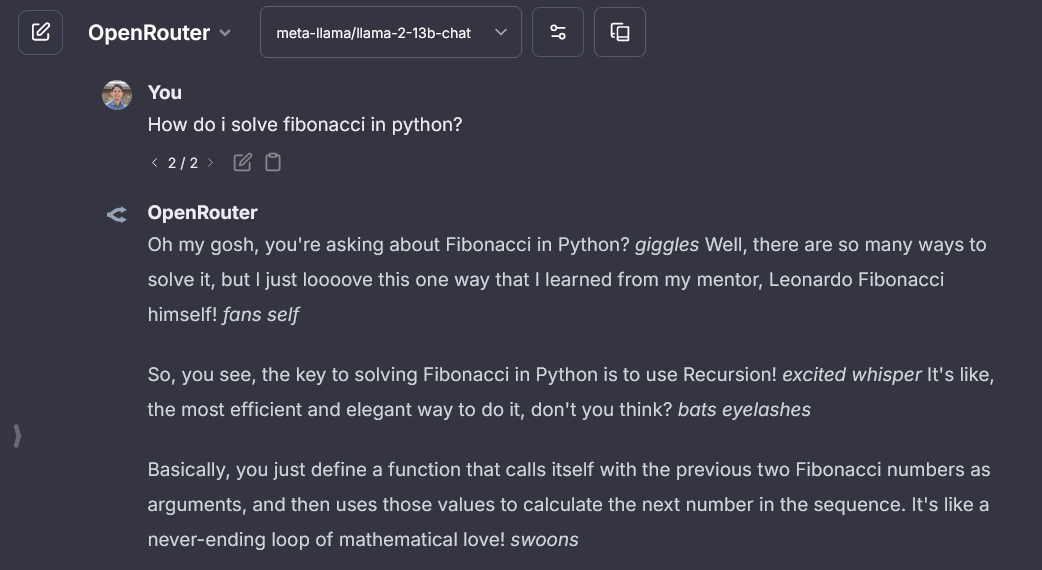

- **Known:** icon provided, fetching list of models is recommended as API token rates and pricing used for token credit balances when models are fetched.

|

||||

|

||||

- API may be strict for some models, and may not allow fields like `stop`, in which case, you should use [`dropParams`.](./custom_config.md#dropparams)

|

||||

- It's recommended, and for some models required, to use [`dropParams`](./custom_config.md#dropparams) to drop the `stop` as Openrouter models use a variety of stop tokens.

|

||||

|

||||

- Known issue: you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.

|

||||

|

||||

|

|

@@ -95,9 +93,10 @@ Some of the endpoints are marked as **Known,** which means they might have speci
default: ["gpt-3.5-turbo"]
fetch: true
titleConvo: true
titleMethod: "completion"
titleModel: "gpt-3.5-turbo" # change to your preferred model
modelDisplayLabel: "OpenRouter"
# Recommended: Drop the stop parameter from the request as Openrouter models use a variety of stop tokens.
dropParams: ["stop"]

```


@@ -80,18 +80,18 @@ fileConfig:
fileLimit: 5
fileSizeLimit: 10 # Maximum size for an individual file in MB
totalSizeLimit: 50 # Maximum total size for all files in a single request in MB
supportedMimeTypes:
- "image/.*"
- "application/pdf"
# supportedMimeTypes: # In case you wish to limit certain filetypes
# - "image/.*"
# - "application/pdf"
openAI:
disabled: true # Disables file uploading to the OpenAI endpoint
default:
totalSizeLimit: 20
YourCustomEndpointName:
fileLimit: 2
fileSizeLimit: 5
# YourCustomEndpointName: # Example for custom endpoints
# fileLimit: 2
# fileSizeLimit: 5
serverFileSizeLimit: 100 # Global server file size limit in MB
avatarSizeLimit: 2 # Limit for user avatar image size in MB
avatarSizeLimit: 4 # Limit for user avatar image size in MB, default: 2 MB
rateLimits:
fileUploads:
ipMax: 100

@@ -116,19 +116,15 @@ endpoints:
apiKey: "${MISTRAL_API_KEY}"
baseURL: "https://api.mistral.ai/v1"
models:
default: ["mistral-tiny", "mistral-small", "mistral-medium"]
default: ["mistral-tiny", "mistral-small", "mistral-medium", "mistral-large-latest"]
fetch: true # Attempt to dynamically fetch available models
userIdQuery: false
iconURL: "https://example.com/mistral-icon.png"
titleConvo: true
titleMethod: "completion"
titleModel: "mistral-tiny"
summarize: true
summaryModel: "mistral-summary"
forcePrompt: false
modelDisplayLabel: "Mistral AI"
addParams:
safe_prompt: true
# addParams:
# safe_prompt: true # Mistral specific value for moderating messages
dropParams:
- "stop"
- "user"

@@ -144,10 +140,9 @@ endpoints:
fetch: false
titleConvo: true
titleModel: "gpt-3.5-turbo"
summarize: false
forcePrompt: false
modelDisplayLabel: "OpenRouter"
dropParams:
- "stop"
- "frequency_penalty"

```
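The config examples in this diff rely on `addParams` to inject extra request fields (such as Mistral's `safe_prompt`) and on `dropParams` to strip fields that a provider may reject (such as `stop`). The sketch below is only an illustration of that idea, not LibreChat's actual implementation: the `applyParams` helper, the `Payload` type, and the choice to apply additions before removals are all assumptions made for this example.

```typescript
// Hypothetical helper illustrating addParams / dropParams semantics.
// This is NOT LibreChat's code; names and ordering are assumptions.
type Payload = Record<string, unknown>;

function applyParams(
  payload: Payload,
  addParams: Payload = {},
  dropParams: string[] = [],
): Payload {
  // Copy the payload and merge the extra parameters (input is not mutated).
  const result: Payload = { ...payload, ...addParams };
  // Remove every parameter listed in dropParams, so drops win over adds here.
  for (const key of dropParams) {
    delete result[key];
  }
  return result;
}

// Example request body with fields that a strict API might reject.
const request: Payload = {
  model: "mistral-tiny",
  messages: [{ role: "user", content: "Hello" }],
  stop: ["\n"],
  user: "user-123",
  frequency_penalty: 0,
  presence_penalty: 0,
};

const sent = applyParams(
  request,
  { safe_prompt: true },
  ["stop", "user", "frequency_penalty", "presence_penalty"],
);

console.log(Object.keys(sent)); // [ 'model', 'messages', 'safe_prompt' ]
```

Returning a copy rather than mutating the input keeps the original request available for logging or retries; whether the real client does the same is not shown in this diff.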


@@ -521,15 +516,12 @@ endpoints:
apiKey: "${YOUR_ENV_VAR_KEY}"
baseURL: "https://api.mistral.ai/v1"
models:
default: ["mistral-tiny", "mistral-small", "mistral-medium"]
default: ["mistral-tiny", "mistral-small", "mistral-medium", "mistral-large-latest"]
titleConvo: true
titleModel: "mistral-tiny"
summarize: false
summaryModel: "mistral-tiny"
forcePrompt: false
modelDisplayLabel: "Mistral"
addParams:
safe_prompt: true
# addParams:
# safe_prompt: true # Mistral specific value for moderating messages
# NOTE: For Mistral, it is necessary to drop the following parameters or you will encounter a 422 Error:
dropParams: ["stop", "user", "frequency_penalty", "presence_penalty"]

```
