Mirror of https://github.com/danny-avila/LibreChat.git, synced 2025-12-17 17:00:15 +01:00

🤖 docs: Add Groq and other Compatible AI Endpoints (#1915)

* chore: bump bun dependencies
* feat: make `groq` a known endpoint
* docs: compatible ai endpoints
* Update ai_endpoints.md
* Update ai_endpoints.md

parent 04eeb59d47
commit 5d887492ea

10 changed files with 134 additions and 7 deletions

bun.lockb
Binary file not shown.

client/public/assets/groq.png
Normal file
Binary file not shown. Size: 4 KiB

@@ -30,6 +30,14 @@ export default function UnknownIcon({
     );
   } else if (currentEndpoint === KnownEndpoints.openrouter) {
     return <img className={className} src="/assets/openrouter.png" alt="OpenRouter Icon" />;
+  } else if (currentEndpoint === KnownEndpoints.groq) {
+    return (
+      <img
+        className={context === 'landing' ? '' : className}
+        src="/assets/groq.png"
+        alt="Groq Cloud Icon"
+      />
+    );
   }

   return <CustomMinimalIcon className={className} />;

docs/install/configuration/ai_endpoints.md
Normal file, 103 lines changed

@@ -0,0 +1,103 @@
---
title: ✅ Compatible AI Endpoints
description: List of known, compatible AI Endpoints with example setups for the `librechat.yaml` AKA the LibreChat Custom Config file.
weight: -9
---

# Compatible AI Endpoints

## Intro

This page lists known, compatible AI Endpoints with example setups for the `librechat.yaml` file, also known as the [Custom Config](./custom_config.md#custom-endpoint-object-structure) file.

In all of the examples, arbitrary environment variable names are defined but you can use any name you wish, as well as changing the value to `user_provided` to allow users to submit their own API key from the web UI.

Some of the endpoints are marked as **Known,** which means they might have special handling and/or an icon already provided in the app for you.

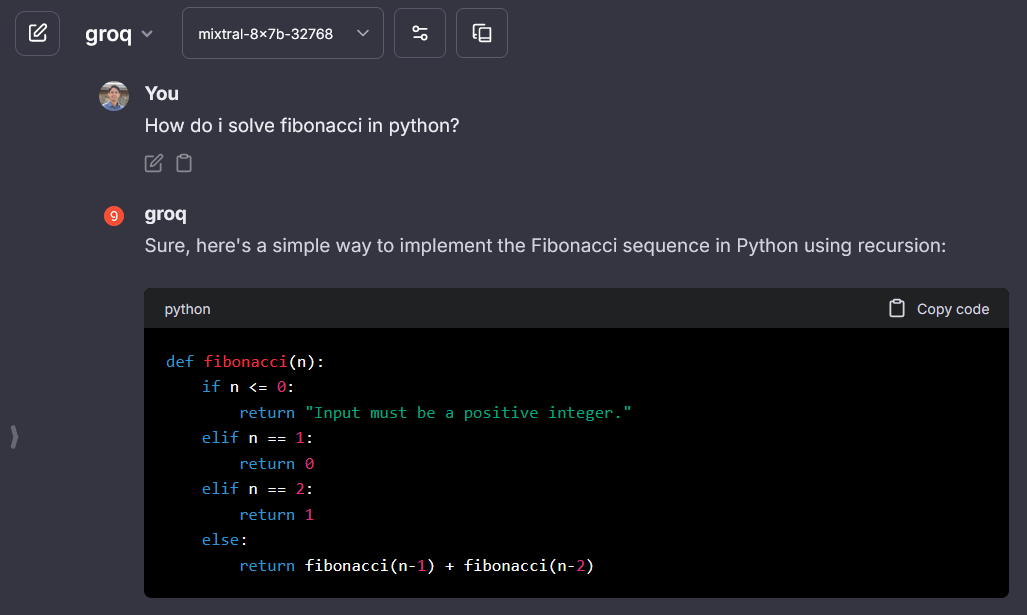
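As a rough illustration of the two options described in the intro (an environment variable reference such as `${GROQ_API_KEY}`, or the literal value `user_provided`), the sketch below shows one way such an `apiKey` string could be interpreted. The `resolveApiKey` helper, the `KeySource` type, and the exact placeholder syntax it accepts are assumptions made for this example, not LibreChat's actual implementation.

```typescript
// Hypothetical result type: where the API key for an endpoint comes from.
type KeySource =
  | { kind: 'user' } // the user submits a key from the web UI
  | { kind: 'env'; value: string } // read from an environment variable
  | { kind: 'literal'; value: string }; // the config value is used as-is

// Hypothetical helper: interprets an `apiKey` value from librechat.yaml.
function resolveApiKey(raw: string, env: Record<string, string | undefined>): KeySource {
  if (raw === 'user_provided') {
    return { kind: 'user' };
  }
  // Assumed placeholder syntax: the whole value is "${NAME}".
  const name = /^\$\{([A-Za-z_][A-Za-z0-9_]*)\}$/.exec(raw)?.[1];
  if (name !== undefined) {
    const value = env[name];
    if (value === undefined) {
      throw new Error('Environment variable ' + name + ' is not set');
    }
    return { kind: 'env', value };
  }
  return { kind: 'literal', value: raw };
}

// Usage with a stand-in environment object (any variable name works).
const fromEnv = resolveApiKey('${GROQ_API_KEY}', { GROQ_API_KEY: 'example-key' });
const fromUser = resolveApiKey('user_provided', {});
console.log(fromEnv.kind, fromUser.kind);
```

Passing the environment in as a plain object keeps the helper independent of any particular runtime; in Node.js one could pass `process.env`.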

## Groq

**Notes:**

- **Known:** icon provided.

- **Temperature:** If you set a temperature value of 0, it will be converted to 1e-8. If you run into any issues, please try setting the value to a float32 > 0 and <= 2.

- Groq is currently free but rate limited: 10 queries/minute, 100/hour.

```yaml
- name: "groq"
  apiKey: "${GROQ_API_KEY}"
  baseURL: "https://api.groq.com/openai/v1/"
  models:
    default: [
      "llama2-70b-4096",
      "mixtral-8x7b-32768"
      ]
    fetch: false
  titleConvo: true
  titleMethod: "completion"
  titleModel: "mixtral-8x7b-32768"
  modelDisplayLabel: "groq"
  iconURL: "https://raw.githubusercontent.com/fuegovic/lc-config-yaml/main/icons/groq.png"
```

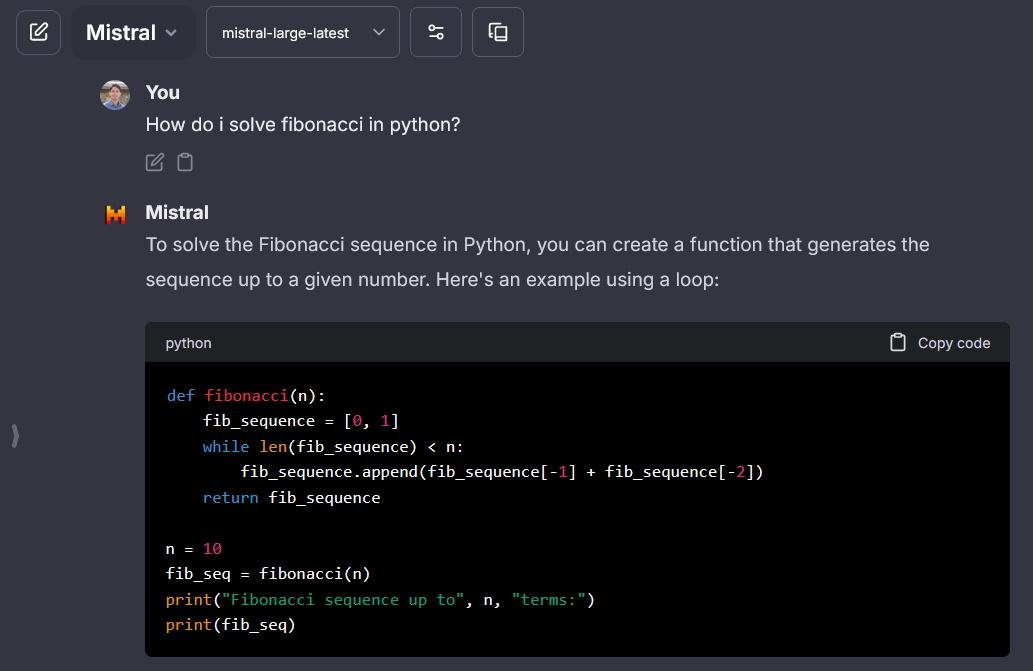
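The temperature note for Groq can be mirrored on the client side. The following is a minimal sketch, assuming you want to validate the value before sending a request; `normalizeGroqTemperature` and the two constants are hypothetical names introduced here, and the bounds are taken directly from the note (0 becomes 1e-8, accepted values are > 0 and <= 2).

```typescript
// Bounds taken from the Groq note above; the constant names are hypothetical.
const GROQ_MIN_TEMPERATURE = 1e-8;
const GROQ_MAX_TEMPERATURE = 2;

// Hypothetical helper: returns a temperature within the documented range,
// mapping 0 to 1e-8 and rejecting values outside [0, 2].
function normalizeGroqTemperature(temperature: number): number {
  if (!Number.isFinite(temperature) || temperature < 0 || temperature > GROQ_MAX_TEMPERATURE) {
    throw new RangeError('temperature must be between 0 and ' + GROQ_MAX_TEMPERATURE);
  }
  return temperature === 0 ? GROQ_MIN_TEMPERATURE : temperature;
}

console.log(normalizeGroqTemperature(0)); // 1e-8
console.log(normalizeGroqTemperature(0.7)); // 0.7
```

Rejecting out-of-range values early gives a clearer error than whatever the remote API would return.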

## Mistral AI

**Notes:**

- **Known:** icon provided, special handling of message roles: system message is only allowed at the top of the messages payload.

- API is strict with unrecognized parameters and errors are not descriptive (usually "no body")

- The use of [`dropParams`](./custom_config.md#dropparams) to drop "stop", "user", "frequency_penalty", "presence_penalty" params is required.

- Allows fetching the models list, but be careful not to use embedding models for chat.

```yaml
- name: "Mistral"
  apiKey: "${MISTRAL_API_KEY}"
  baseURL: "https://api.mistral.ai/v1"
  models:
    default: ["mistral-tiny", "mistral-small", "mistral-medium", "mistral-large-latest"]
    fetch: true
  titleConvo: true
  titleMethod: "completion"
  titleModel: "mistral-tiny"
  modelDisplayLabel: "Mistral"
  # Drop Default params parameters from the request. See default params in guide linked below.
  # NOTE: For Mistral, it is necessary to drop the following parameters or you will encounter a 422 Error:
  dropParams: ["stop", "user", "frequency_penalty", "presence_penalty"]
```

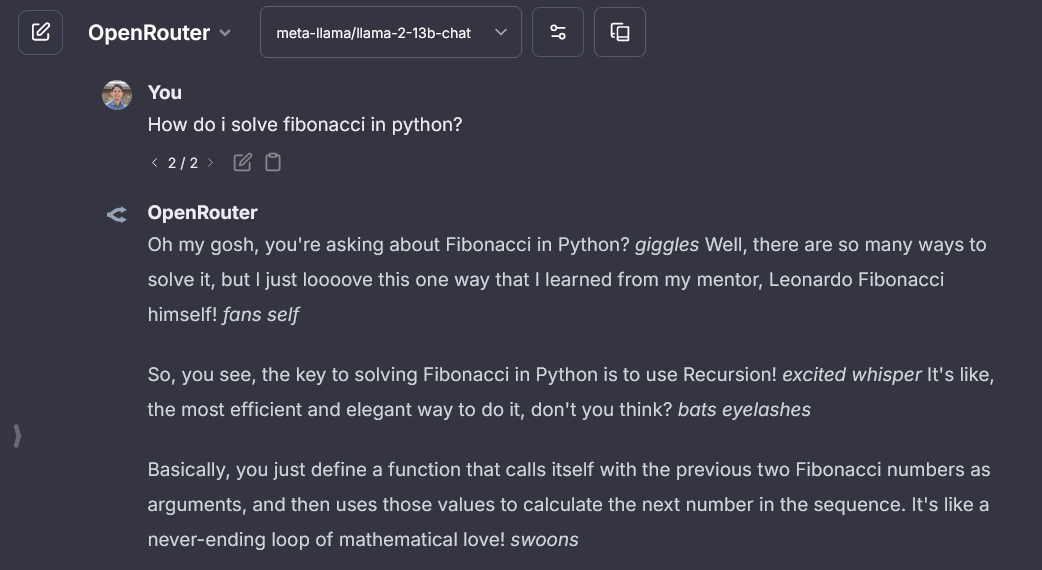
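To make the effect of `dropParams` concrete, here is a small sketch of removing the listed parameters from a request body before it is sent. The `applyDropParams` function and the `RequestPayload` type are hypothetical and only illustrate the idea; they are not taken from the LibreChat code base.

```typescript
// Hypothetical payload type: a request body keyed by parameter name.
type RequestPayload = Record<string, unknown>;

// Hypothetical helper: returns a copy of the payload without the dropped keys.
function applyDropParams(payload: RequestPayload, dropParams: readonly string[]): RequestPayload {
  const result: RequestPayload = {};
  for (const key of Object.keys(payload)) {
    if (dropParams.indexOf(key) === -1) {
      result[key] = payload[key];
    }
  }
  return result;
}

// The same list as in the Mistral example config above.
const mistralDropParams = ['stop', 'user', 'frequency_penalty', 'presence_penalty'];
const cleaned = applyDropParams(
  { model: 'mistral-tiny', temperature: 0.7, stop: ['\n'], user: 'user-123' },
  mistralDropParams,
);
console.log(Object.keys(cleaned)); // only "model" and "temperature" remain
```

Returning a copy instead of mutating the input keeps the original payload available for endpoints that accept those parameters.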

## Openrouter

**Notes:**

- **Known:** icon provided, fetching list of models is recommended as API token rates and pricing used for token credit balances when models are fetched.

- API may be strict for some models, and may not allow fields like `stop`, in which case, you should use [`dropParams`.](./custom_config.md#dropparams)

- Known issue: you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.

```yaml
- name: "OpenRouter"
  # For `apiKey` and `baseURL`, you can use environment variables that you define.
  # recommended environment variables:
  # Known issue: you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.
  apiKey: "${OPENROUTER_KEY}"
  models:
    default: ["gpt-3.5-turbo"]
    fetch: true
  titleConvo: true
  titleMethod: "completion"
  titleModel: "gpt-3.5-turbo" # change to your preferred model
  modelDisplayLabel: "OpenRouter"
```

@@ -1,3 +1,9 @@
+---
+title: 🅰️ Azure OpenAI
+description: Comprehensive guide for configuring Azure OpenAI through the `librechat.yaml` file AKA the LibreChat Config file. This document is your one-stop resource for understanding and customizing Azure settings and models.
+weight: -10
+---
+
 # Azure OpenAI

 **Azure OpenAI Integration for LibreChat**

@@ -1,11 +1,13 @@
 ---
-title: 🖥️ Custom Endpoints & Config
+title: 🖥️ Custom Config
 description: Comprehensive guide for configuring the `librechat.yaml` file AKA the LibreChat Config file. This document is your one-stop resource for understanding and customizing endpoints & other integrations.
-weight: -10
+weight: -11
 ---

 # LibreChat Configuration Guide

+## Intro
+
 Welcome to the guide for configuring the **librechat.yaml** file in LibreChat.

 This file enables the integration of custom AI endpoints, enabling you to connect with any AI provider compliant with OpenAI API standards.

@@ -22,6 +24,10 @@ Stay tuned for ongoing enhancements to customize your LibreChat instance!

 **Note:** To verify your YAML config, you can use online tools like [yamlchecker.com](https://yamlchecker.com/)

+## Compatible Endpoints
+
+Any API designed to be compatible with OpenAI's should be supported, but here is a list of **[known compatible endpoints](./ai_endpoints.md) including example setups.**
+
 ## Setup

 **The `librechat.yaml` file should be placed in the root of the project where the .env file is located.**

@@ -564,6 +570,7 @@ endpoints:
 - **Note**: The following are "known endpoints" (case-insensitive), which have icons provided for them. If your endpoint `name` matches the following names, you should omit this field:
   - "Mistral"
   - "OpenRouter"
+  - "Groq"

 ### **models**:

@@ -1,7 +1,7 @@
 ---
 title: ⚙️ Environment Variables
 description: Comprehensive guide for configuring your application's environment with the `.env` file. This document is your one-stop resource for understanding and customizing the environment variables that will shape your application's behavior in different contexts.
-weight: -11
+weight: -12
 ---

 # .env File Configuration

@@ -7,11 +7,12 @@ weight: 2
 # Configuration

 * ⚙️ [Environment Variables](./dotenv.md)
-* 🖥️ [Custom Endpoints & Config](./custom_config.md)
+* 🖥️ [Custom Config](./custom_config.md)
+* 🅰️ [Azure OpenAI](./azure_openai.md)
+* ✅ [Compatible AI Endpoints](./ai_endpoints.md)
 * 🐋 [Docker Compose Override](./docker_override.md)
 ---
 * 🤖 [AI Setup](./ai_setup.md)
-* 🅰️ [Azure OpenAI](./azure_openai.md)
 * 🚅 [LiteLLM](./litellm.md)
 * 💸 [Free AI APIs](./free_ai_apis.md)
 ---

@@ -17,10 +17,11 @@ weight: 1
 ## **[Configuration](./configuration/index.md)**

 * ⚙️ [Environment Variables](./configuration/dotenv.md)
-* 🖥️ [Custom Endpoints & Config](./configuration/custom_config.md)
+* 🖥️ [Custom Config](./configuration/custom_config.md)
+* 🅰️ [Azure OpenAI](./configuration/azure_openai.md)
+* ✅ [Compatible AI Endpoints](./configuration/ai_endpoints.md)
 * 🐋 [Docker Compose Override](./configuration/docker_override.md)
 * 🤖 [AI Setup](./configuration/ai_setup.md)
-* 🅰️ [Azure OpenAI](./configuration/azure_openai.md)
 * 🚅 [LiteLLM](./configuration/litellm.md)
 * 💸 [Free AI APIs](./configuration/free_ai_apis.md)
 * 🛂 [Authentication System](./configuration/user_auth_system.md)

@@ -174,6 +174,7 @@ export type TCustomConfig = z.infer<typeof configSchema>;
 export enum KnownEndpoints {
   mistral = 'mistral',
   openrouter = 'openrouter',
+  groq = 'groq',
 }

 export const defaultEndpoints: EModelEndpoint[] = [
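The docs in this commit describe known endpoint names as case-insensitive, while the enum values are lowercase. A minimal sketch of how a configured `name` such as "Groq" could be matched against the enum follows; the enum is copied from the hunk above so the block is self-contained, and `matchKnownEndpoint` is a hypothetical helper, not a function from the repository.

```typescript
// Copied from the diff above so this example stands alone.
enum KnownEndpoints {
  mistral = 'mistral',
  openrouter = 'openrouter',
  groq = 'groq',
}

// Hypothetical helper: case-insensitive lookup of a configured endpoint name.
function matchKnownEndpoint(name: string): KnownEndpoints | undefined {
  const normalized = name.toLowerCase();
  const known = [KnownEndpoints.mistral, KnownEndpoints.openrouter, KnownEndpoints.groq];
  for (const endpoint of known) {
    if (endpoint === normalized) {
      return endpoint;
    }
  }
  return undefined;
}

console.log(matchKnownEndpoint('Groq')); // "groq"
console.log(matchKnownEndpoint('OpenRouter')); // "openrouter"
console.log(matchKnownEndpoint('Ollama')); // undefined
```

A name that matches a known endpoint can then rely on the built-in icon, which is why the docs suggest omitting `iconURL` in that case.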
