🤖feat: add multiple known endpoints (#1917)

* feat: add known endpoints

* docs: add known endpoints

* update ai_endpoints.md remove the groq icon from the example

* Update ai_endpoints.md

---------

Co-authored-by: Danny Avila <messagedaniel@protonmail.com>

13

.env.example

@@ -54,6 +54,19 @@ DEBUG_CONSOLE=false
 
 PROXY=
 
+#===================================#
+# Known Endpoints - librechat.yaml #
+#===================================#
+# https://docs.librechat.ai/install/configuration/ai_endpoints.html
+
+# GROQ_API_KEY=
+# MISTRAL_API_KEY=
+# OPENROUTER_KEY=
+# ANYSCALE_API_KEY=
+# FIREWORKS_API_KEY=
+# PERPLEXITY_API_KEY=
+# TOGETHERAI_API_KEY=
+
 #============#
 # Anthropic #
 #============#
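The keys above are commented out by default; once set, they are referenced from `librechat.yaml` through `${VAR}` placeholders such as `apiKey: "${ANYSCALE_API_KEY}"`. The sketch below shows one way such a placeholder could be resolved against the environment. `resolveEnvPlaceholder` and its fallback (keeping the placeholder text when the variable is unset) are assumptions for illustration, not LibreChat's actual loader.

```typescript
// Hypothetical sketch: resolve a `${VAR}` placeholder from librechat.yaml
// against environment variables. Not LibreChat's actual loader.
type Env = Record<string, string | undefined>;

// Matches a value that is exactly one placeholder, e.g. "${GROQ_API_KEY}".
const PLACEHOLDER = /^\$\{([A-Za-z_][A-Za-z0-9_]*)\}$/;

function resolveEnvPlaceholder(value: string, env: Env): string {
  const match = PLACEHOLDER.exec(value);
  if (match === null) {
    return value; // a literal value, used as-is
  }
  const name = match[1] ?? '';
  const resolved = env[name];
  // Assumption: an unset or empty variable keeps the placeholder text,
  // which makes a missing key easy to spot.
  return resolved ? resolved : value;
}

const env: Env = { ANYSCALE_API_KEY: 'my-anyscale-key' };
console.log(resolveEnvPlaceholder('${ANYSCALE_API_KEY}', env)); // prints: my-anyscale-key
console.log(resolveEnvPlaceholder('${FIREWORKS_API_KEY}', env)); // prints: ${FIREWORKS_API_KEY}
console.log(resolveEnvPlaceholder('sk-literal', env)); // prints: sk-literal
```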

BIN
client/public/assets/anyscale.png
Normal file, after size: 69 KiB

BIN
client/public/assets/fireworks.png
Normal file, after size: 218 KiB

Before size: 4 KiB, after size: 23 KiB

BIN
client/public/assets/ollama.png
Normal file, after size: 39 KiB

BIN
client/public/assets/perplexity.png
Normal file, after size: 14 KiB

BIN
client/public/assets/together.png
Normal file, after size: 20 KiB

@@ -38,6 +38,46 @@ export default function UnknownIcon({
         alt="Groq Cloud Icon"
       />
     );
+  } else if (currentEndpoint === KnownEndpoints.anyscale) {
+    return (
+      <img
+        className={context === 'landing' ? '' : className}
+        src="/assets/anyscale.png"
+        alt="Anyscale Icon"
+      />
+    );
+  } else if (currentEndpoint === KnownEndpoints.fireworks) {
+    return (
+      <img
+        className={context === 'landing' ? '' : className}
+        src="/assets/fireworks.png"
+        alt="Fireworks Icon"
+      />
+    );
+  } else if (currentEndpoint === KnownEndpoints.ollama) {
+    return (
+      <img
+        className={context === 'landing' ? '' : className}
+        src="/assets/ollama.png"
+        alt="Ollama Icon"
+      />
+    );
+  } else if (currentEndpoint === KnownEndpoints.perplexity) {
+    return (
+      <img
+        className={context === 'landing' ? '' : className}
+        src="/assets/perplexity.png"
+        alt="Perplexity Icon"
+      />
+    );
+  } else if (currentEndpoint === KnownEndpoints['together.ai']) {
+    return (
+      <img
+        className={context === 'landing' ? '' : className}
+        src="/assets/together.png"
+        alt="together.ai Icon"
+      />
+    );
   }
 
   return <CustomMinimalIcon className={className} />;
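Each new branch above renders the same `<img>` shape and differs only in the asset path and alt text. As a hypothetical alternative (not what this commit does), that data could live in a lookup table. `knownIcons` and `getKnownIcon` are illustrative names; the returned object is meant to be spread onto `<img>`, and `undefined` signals the `<CustomMinimalIcon />` fallback.

```typescript
// Hypothetical sketch, not part of this commit: asset path and alt text
// from the new branches, keyed by the same strings as KnownEndpoints.
type IconInfo = { src: string; alt: string };

const knownIcons = new Map<string, IconInfo>([
  ['anyscale', { src: '/assets/anyscale.png', alt: 'Anyscale Icon' }],
  ['fireworks', { src: '/assets/fireworks.png', alt: 'Fireworks Icon' }],
  ['ollama', { src: '/assets/ollama.png', alt: 'Ollama Icon' }],
  ['perplexity', { src: '/assets/perplexity.png', alt: 'Perplexity Icon' }],
  ['together.ai', { src: '/assets/together.png', alt: 'together.ai Icon' }],
]);

// Returns props to spread onto <img>, or undefined when the caller should
// fall back to <CustomMinimalIcon />.
function getKnownIcon(
  endpoint: string,
  context: string,
  className: string,
): (IconInfo & { className: string }) | undefined {
  const icon = knownIcons.get(endpoint);
  if (icon === undefined) {
    return undefined;
  }
  // Same rule as the diff: no extra class on the landing page.
  return { ...icon, className: context === 'landing' ? '' : className };
}

console.log(getKnownIcon('ollama', 'landing', 'icon-md'));
console.log(getKnownIcon('openAI', 'nav', 'icon-md')); // prints: undefined
```

A `Map` is used instead of a plain object so that inherited keys such as `toString` can never match an endpoint name.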

@ -15,6 +15,7 @@ In all of the examples, arbitrary environment variable names are defined but you

|

||||||

Some of the endpoints are marked as **Known,** which means they might have special handling and/or an icon already provided in the app for you.

|

Some of the endpoints are marked as **Known,** which means they might have special handling and/or an icon already provided in the app for you.

|

||||||

|

|

||||||

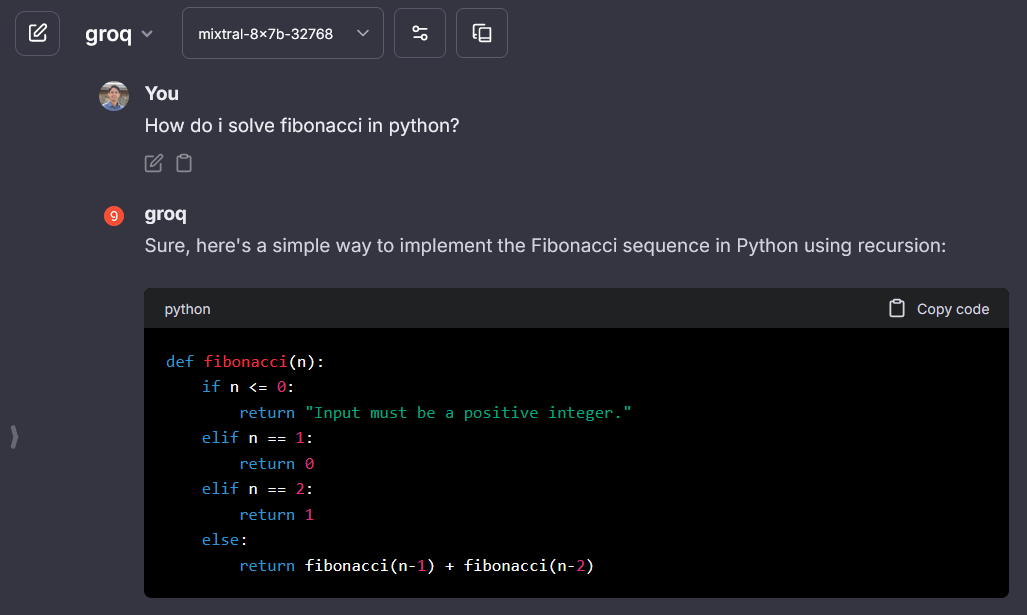

## Groq

|

## Groq

|

||||||

|

> groq API key: [wow.groq.com](https://wow.groq.com/)

|

||||||

|

|

||||||

**Notes:**

|

**Notes:**

|

||||||

|

|

||||||

|

|

@@ -37,13 +38,13 @@ Some of the endpoints are marked as **Known,** which means they might have speci
       titleConvo: true
       titleModel: "mixtral-8x7b-32768"
       modelDisplayLabel: "groq"
-      iconURL: "https://raw.githubusercontent.com/fuegovic/lc-config-yaml/main/icons/groq.png"
 ```
 
 
 
 
 ## Mistral AI
+> Mistral API key: [console.mistral.ai](https://console.mistral.ai/)
 
 **Notes:**
 

@ -74,14 +75,15 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

||||||

|

|

||||||

|

|

||||||

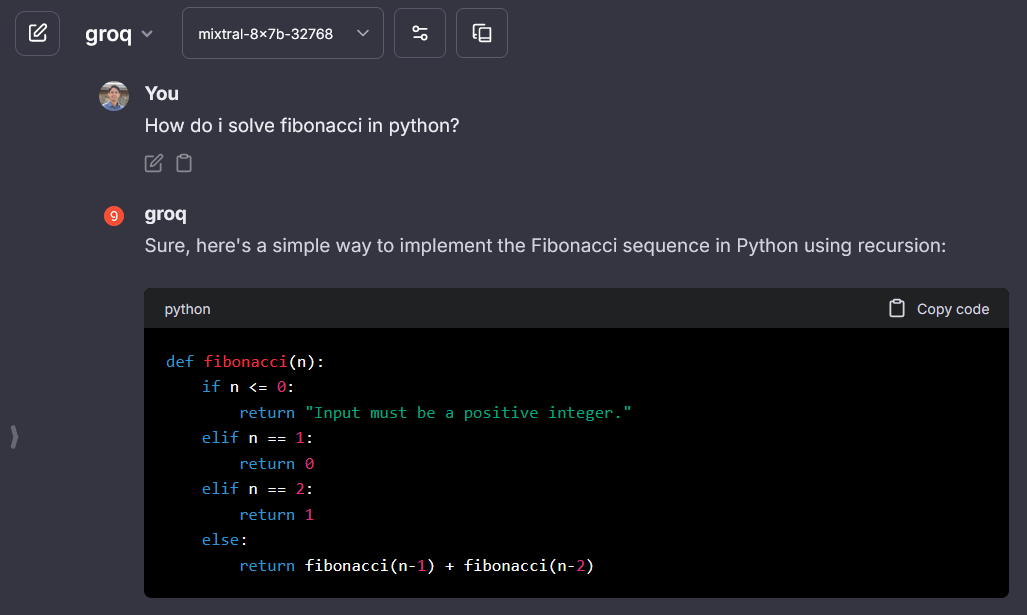

## Openrouter

|

## Openrouter

|

||||||

|

> OpenRouter API key: [openrouter.ai/keys](https://openrouter.ai/keys)

|

||||||

|

|

||||||

**Notes:**

|

**Notes:**

|

||||||

|

|

||||||

- **Known:** icon provided, fetching list of models is recommended as API token rates and pricing used for token credit balances when models are fetched.

|

- **Known:** icon provided, fetching list of models is recommended as API token rates and pricing used for token credit balances when models are fetched.

|

||||||

|

|

||||||

- It's recommended, and for some models required, to use [`dropParams`](./custom_config.md#dropparams) to drop the `stop` as Openrouter models use a variety of stop tokens.

|

- It's recommended, and for some models required, to use [`dropParams`](./custom_config.md#dropparams) to drop the `stop` parameter as Openrouter models use a variety of stop tokens.

|

||||||

|

|

||||||

- Known issue: you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.

|

- **Known issue:** you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.

|

||||||

|

|

||||||

```yaml

|

```yaml

|

||||||

- name: "OpenRouter"

|

- name: "OpenRouter"

|

||||||

|

|
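The `dropParams` notes (here `stop` for OpenRouter; elsewhere in this file `user` for Fireworks and `stop`/`frequency_penalty` for Perplexity) describe removing fields from the outgoing request. A minimal sketch of that effect, assuming the request body is a flat JSON object; `applyDropParams` is a hypothetical helper, not LibreChat's implementation.

```typescript
// Hypothetical sketch: keys listed in dropParams are removed from the
// request body before it is sent to the provider.
type Payload = Record<string, unknown>;

function applyDropParams(payload: Payload, dropParams: readonly string[] = []): Payload {
  const result: Payload = { ...payload }; // shallow copy; the caller's object is untouched
  for (const key of dropParams) {
    delete result[key];
  }
  return result;
}

const body: Payload = {
  model: 'sonar-medium-chat',
  messages: [{ role: 'user', content: 'Hi' }],
  stop: ['\n\nHuman:'],
  frequency_penalty: 0,
  user: 'user-123',
};

// Mirrors the Perplexity example's `dropParams: ["stop", "frequency_penalty"]`.
console.log(Object.keys(applyDropParams(body, ['stop', 'frequency_penalty'])));
// prints: [ 'model', 'messages', 'user' ]
```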

@ -100,3 +102,162 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

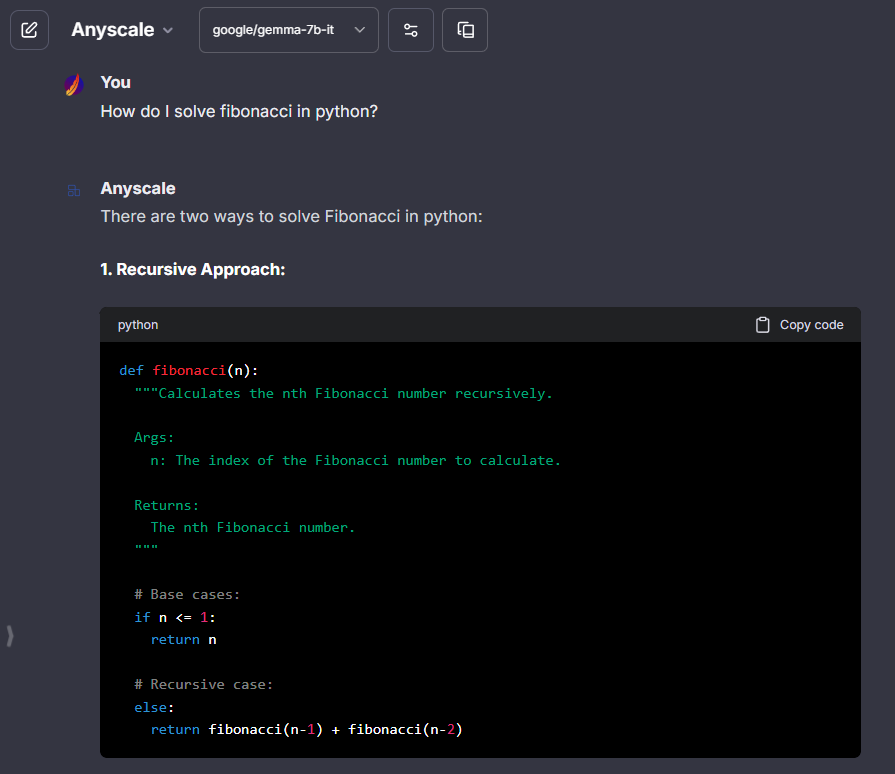

## Anyscale

|

||||||

|

> Anyscale API key: [anyscale.com/credentials](https://app.endpoints.anyscale.com/credentials)

|

||||||

|

|

||||||

|

**Notes:**

|

||||||

|

|

||||||

|

- **Known:** icon provided, fetching list of models is recommended.

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

- name: "Anyscale"

|

||||||

|

apiKey: "${ANYSCALE_API_KEY}"

|

||||||

|

baseURL: "https://api.endpoints.anyscale.com/v1"

|

||||||

|

models:

|

||||||

|

default: [

|

||||||

|

"meta-llama/Llama-2-7b-chat-hf",

|

||||||

|

]

|

||||||

|

fetch: true

|

||||||

|

titleConvo: true

|

||||||

|

titleModel: "meta-llama/Llama-2-7b-chat-hf"

|

||||||

|

summarize: false

|

||||||

|

summaryModel: "meta-llama/Llama-2-7b-chat-hf"

|

||||||

|

forcePrompt: false

|

||||||

|

modelDisplayLabel: "Anyscale"

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

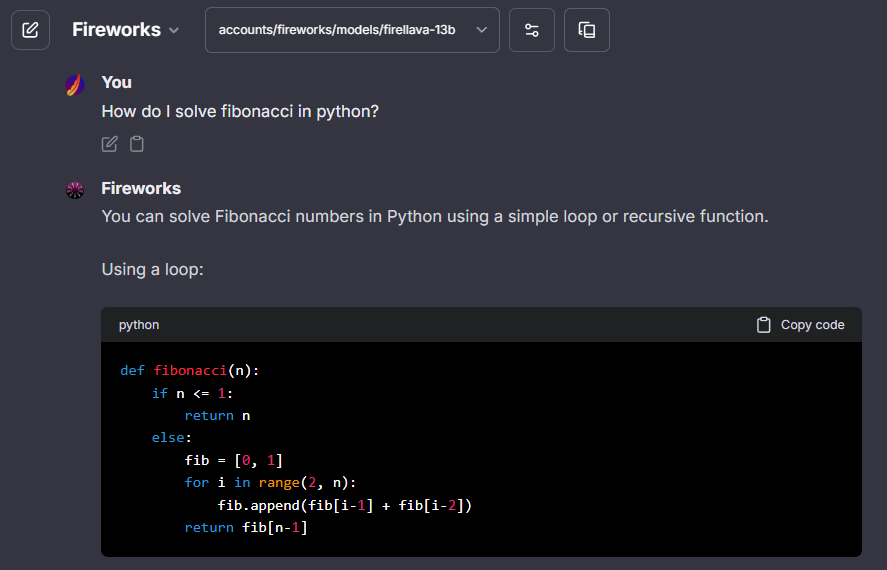

## Fireworks

|

||||||

|

> Fireworks API key: [fireworks.ai/api-keys](https://fireworks.ai/api-keys)

|

||||||

|

|

||||||

|

**Notes:**

|

||||||

|

|

||||||

|

- **Known:** icon provided, fetching list of models is recommended.

|

||||||

|

- - API may be strict for some models, and may not allow fields like `user`, in which case, you should use [`dropParams`.](./custom_config.md#dropparams)

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

- name: "Fireworks"

|

||||||

|

apiKey: "${FIREWORKS_API_KEY}"

|

||||||

|

baseURL: "https://api.fireworks.ai/inference/v1"

|

||||||

|

models:

|

||||||

|

default: [

|

||||||

|

"accounts/fireworks/models/mixtral-8x7b-instruct",

|

||||||

|

]

|

||||||

|

fetch: true

|

||||||

|

titleConvo: true

|

||||||

|

titleModel: "accounts/fireworks/models/llama-v2-7b-chat"

|

||||||

|

summarize: false

|

||||||

|

summaryModel: "accounts/fireworks/models/llama-v2-7b-chat"

|

||||||

|

forcePrompt: false

|

||||||

|

modelDisplayLabel: "Fireworks"

|

||||||

|

dropParams: ["user"]

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

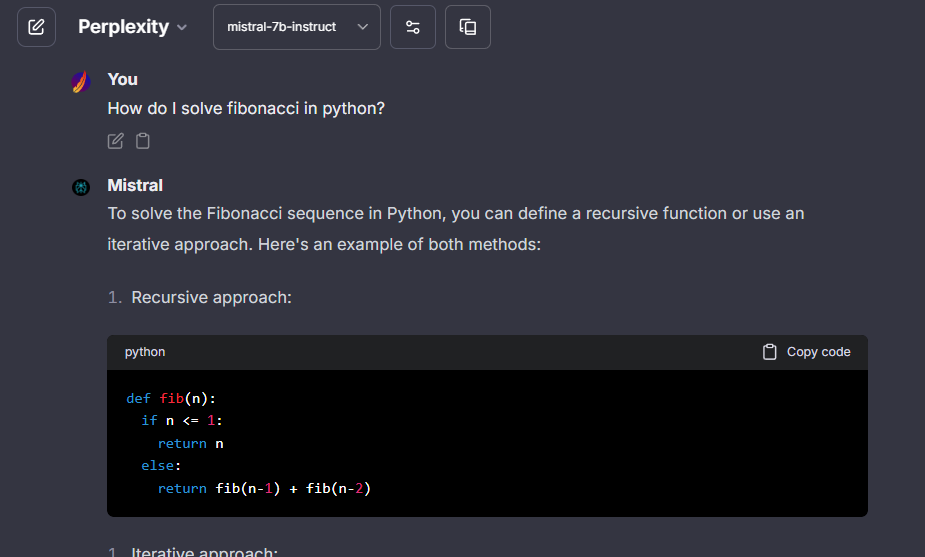

## Perplexity

|

||||||

|

> Perplexity API key: [perplexity.ai/settings/api](https://www.perplexity.ai/settings/api)

|

||||||

|

|

||||||

|

**Notes:**

|

||||||

|

|

||||||

|

- **Known:** icon provided.

|

||||||

|

- **Known issue:** fetching list of models is not supported.

|

||||||

|

- API may be strict for some models, and may not allow fields like `stop` and `frequency_penalty` may cause an error when set to 0, in which case, you should use [`dropParams`.](./custom_config.md#dropparams)

|

||||||

|

- The example includes a model list, which was last updated on February 27, 2024, for your convenience.

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

- name: "Perplexity"

|

||||||

|

apiKey: "${PERPLEXITY_API_KEY}"

|

||||||

|

baseURL: "https://api.perplexity.ai/"

|

||||||

|

models:

|

||||||

|

default: [

|

||||||

|

"mistral-7b-instruct",

|

||||||

|

"sonar-small-chat",

|

||||||

|

"sonar-small-online",

|

||||||

|

"sonar-medium-chat",

|

||||||

|

"sonar-medium-online"

|

||||||

|

]

|

||||||

|

fetch: false # fetching list of models is not supported

|

||||||

|

titleConvo: true

|

||||||

|

titleModel: "sonar-medium-chat"

|

||||||

|

summarize: false

|

||||||

|

summaryModel: "sonar-medium-chat"

|

||||||

|

forcePrompt: false

|

||||||

|

dropParams: ["stop", "frequency_penalty"]

|

||||||

|

modelDisplayLabel: "Perplexity"

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## together.ai

|

||||||

|

> together.ai API key: [api.together.xyz/settings/api-keys](https://api.together.xyz/settings/api-keys)

|

||||||

|

|

||||||

|

**Notes:**

|

||||||

|

|

||||||

|

- **Known:** icon provided.

|

||||||

|

- **Known issue:** fetching list of models is not supported.

|

||||||

|

- The example includes a model list, which was last updated on February 27, 2024, for your convenience.

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

- name: "together.ai"

|

||||||

|

apiKey: "${TOGETHERAI_API_KEY}"

|

||||||

|

baseURL: "https://api.together.xyz"

|

||||||

|

models:

|

||||||

|

default: [

|

||||||

|

"zero-one-ai/Yi-34B-Chat",

|

||||||

|

"Austism/chronos-hermes-13b",

|

||||||

|

"DiscoResearch/DiscoLM-mixtral-8x7b-v2",

|

||||||

|

"Gryphe/MythoMax-L2-13b",

|

||||||

|

"lmsys/vicuna-13b-v1.5",

|

||||||

|

"lmsys/vicuna-7b-v1.5",

|

||||||

|

"lmsys/vicuna-13b-v1.5-16k",

|

||||||

|

"codellama/CodeLlama-13b-Instruct-hf",

|

||||||

|

"codellama/CodeLlama-34b-Instruct-hf",

|

||||||

|

"codellama/CodeLlama-70b-Instruct-hf",

|

||||||

|

"codellama/CodeLlama-7b-Instruct-hf",

|

||||||

|

"togethercomputer/llama-2-13b-chat",

|

||||||

|

"togethercomputer/llama-2-70b-chat",

|

||||||

|

"togethercomputer/llama-2-7b-chat",

|

||||||

|

"NousResearch/Nous-Capybara-7B-V1p9",

|

||||||

|

"NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO",

|

||||||

|

"NousResearch/Nous-Hermes-2-Mixtral-8x7B-SFT",

|

||||||

|

"NousResearch/Nous-Hermes-Llama2-70b",

|

||||||

|

"NousResearch/Nous-Hermes-llama-2-7b",

|

||||||

|

"NousResearch/Nous-Hermes-Llama2-13b",

|

||||||

|

"NousResearch/Nous-Hermes-2-Yi-34B",

|

||||||

|

"openchat/openchat-3.5-1210",

|

||||||

|

"Open-Orca/Mistral-7B-OpenOrca",

|

||||||

|

"togethercomputer/Qwen-7B-Chat",

|

||||||

|

"snorkelai/Snorkel-Mistral-PairRM-DPO",

|

||||||

|

"togethercomputer/alpaca-7b",

|

||||||

|

"togethercomputer/falcon-40b-instruct",

|

||||||

|

"togethercomputer/falcon-7b-instruct",

|

||||||

|

"togethercomputer/GPT-NeoXT-Chat-Base-20B",

|

||||||

|

"togethercomputer/Llama-2-7B-32K-Instruct",

|

||||||

|

"togethercomputer/Pythia-Chat-Base-7B-v0.16",

|

||||||

|

"togethercomputer/RedPajama-INCITE-Chat-3B-v1",

|

||||||

|

"togethercomputer/RedPajama-INCITE-7B-Chat",

|

||||||

|

"togethercomputer/StripedHyena-Nous-7B",

|

||||||

|

"Undi95/ReMM-SLERP-L2-13B",

|

||||||

|

"Undi95/Toppy-M-7B",

|

||||||

|

"WizardLM/WizardLM-13B-V1.2",

|

||||||

|

"garage-bAInd/Platypus2-70B-instruct",

|

||||||

|

"mistralai/Mistral-7B-Instruct-v0.1",

|

||||||

|

"mistralai/Mistral-7B-Instruct-v0.2",

|

||||||

|

"mistralai/Mixtral-8x7B-Instruct-v0.1",

|

||||||

|

"teknium/OpenHermes-2-Mistral-7B",

|

||||||

|

"teknium/OpenHermes-2p5-Mistral-7B",

|

||||||

|

"upstage/SOLAR-10.7B-Instruct-v1.0"

|

||||||

|

]

|

||||||

|

fetch: false # fetching list of models is not supported

|

||||||

|

titleConvo: true

|

||||||

|

titleModel: "togethercomputer/llama-2-7b-chat"

|

||||||

|

summarize: false

|

||||||

|

summaryModel: "togethercomputer/llama-2-7b-chat"

|

||||||

|

forcePrompt: false

|

||||||

|

modelDisplayLabel: "together.ai"

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

|

||||||

|

|
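Anyscale and Fireworks set `fetch: true`, while Perplexity and together.ai set `fetch: false` and rely on the `default` list. The sketch below models that choice. `selectModels` is hypothetical, and falling back to `default` when a fetch returns nothing is an assumption rather than documented behavior.

```typescript
// Hypothetical sketch of the `models` block: `default` is the static list,
// `fetch` asks the server to load the provider's list instead.
interface ModelsConfig {
  default: string[];
  fetch?: boolean;
}

// `fetched` stands in for the provider's model-list response; undefined means
// the request failed or was never made.
function selectModels(config: ModelsConfig, fetched?: string[]): string[] {
  if (config.fetch === true && fetched !== undefined && fetched.length > 0) {
    return fetched;
  }
  // Assumption: fall back to the static list when fetching is off or fails.
  return config.default;
}

// Perplexity / together.ai style: fetch is off, so the static list is used.
console.log(selectModels({ default: ['sonar-medium-chat'], fetch: false }, ['other']));
// Anyscale / Fireworks style: fetch is on and a list came back.
console.log(selectModels({ default: ['meta-llama/Llama-2-7b-chat-hf'], fetch: true }, ['a', 'b']));
```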

@@ -106,6 +106,15 @@ UID=1000
 GID=1000
 ```
 
+### librechat.yaml path
+Set an alternative path for the LibreChat config file
+
+> Note: leave commented out to have LibreChat look for the config file in the root folder (default behavior)
+
+```sh
+CONFIG_PATH="/alternative/path/to/librechat.yaml"
+```
+
 ## Endpoints
 In this section you can configure the endpoints and models selection, their API keys, and the proxy and reverse proxy settings for the endpoints that support it.
 
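`CONFIG_PATH` overrides where LibreChat looks for `librechat.yaml`; when it is left unset, the root folder is used. A hypothetical sketch of that resolution, where `resolveConfigPath` and its `rootDir` parameter are illustrative assumptions (the real loader may treat relative paths differently).

```typescript
// Hypothetical sketch: CONFIG_PATH wins when set; otherwise fall back to
// librechat.yaml in the root folder (the documented default behavior).
type Env = Record<string, string | undefined>;

function resolveConfigPath(env: Env, rootDir: string): string {
  const custom = env['CONFIG_PATH']?.trim();
  if (custom) {
    return custom; // e.g. "/alternative/path/to/librechat.yaml"
  }
  // Assumption: rootDir is an absolute POSIX-style path.
  const base = rootDir.endsWith('/') ? rootDir.slice(0, -1) : rootDir;
  return `${base}/librechat.yaml`;
}

console.log(resolveConfigPath({}, '/app')); // prints: /app/librechat.yaml
console.log(
  resolveConfigPath({ CONFIG_PATH: '/alternative/path/to/librechat.yaml' }, '/app'),
); // prints: /alternative/path/to/librechat.yaml
```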

@@ -118,6 +127,20 @@ ENDPOINTS=openAI,assistants,azureOpenAI,bingAI,chatGPTBrowser,google,gptPlugins,
 PROXY=
 ```
 
+### Known Endpoints - librechat.yaml
+- see: [AI Endpoints](./ai_endpoints.md)
+- see also: [Custom Configuration](./custom_config.md)
+
+```sh
+GROQ_API_KEY=
+MISTRAL_API_KEY=
+OPENROUTER_KEY=
+ANYSCALE_API_KEY=
+FIREWORKS_API_KEY=
+PERPLEXITY_API_KEY=
+TOGETHERAI_API_KEY=
+```
+
 ### Anthropic
 see: [Anthropic Endpoint](./ai_setup.md#anthropic)
 - You can request an access key from https://console.anthropic.com/

@@ -171,11 +171,16 @@ export const configSchema = z.object({
 
 export type TCustomConfig = z.infer<typeof configSchema>;
 
-export enum KnownEndpoints {
-  mistral = 'mistral',
-  openrouter = 'openrouter',
-  groq = 'groq',
-}
+export const KnownEndpoints = {
+  mistral: 'mistral',
+  openrouter: 'openrouter',
+  groq: 'groq',
+  anyscale: 'anyscale',
+  fireworks: 'fireworks',
+  ollama: 'ollama',
+  perplexity: 'perplexity',
+  'together.ai': 'together.ai',
+} as const;
 
 export const defaultEndpoints: EModelEndpoint[] = [
   EModelEndpoint.openAI,
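With `KnownEndpoints` now a plain object marked `as const`, each value keeps its string-literal type, and keys that are not valid identifiers (like `'together.ai'`) are read with bracket access, as the icon component does. The sketch copies the object from the diff and derives a union type plus a type guard. `KnownEndpoint`, `knownNames` and `isKnownEndpoint` are hypothetical additions, not part of this commit.

```typescript
// Copied from the diff above.
const KnownEndpoints = {
  mistral: 'mistral',
  openrouter: 'openrouter',
  groq: 'groq',
  anyscale: 'anyscale',
  fireworks: 'fireworks',
  ollama: 'ollama',
  perplexity: 'perplexity',
  'together.ai': 'together.ai',
} as const;

// Hypothetical: union of all known endpoint names, e.g. 'groq' | 'together.ai'.
type KnownEndpoint = (typeof KnownEndpoints)[keyof typeof KnownEndpoints];

// Collect the values without relying on ES2017's Object.values.
const knownNames: readonly string[] = Object.keys(KnownEndpoints).map(
  (key) => KnownEndpoints[key as keyof typeof KnownEndpoints],
);

// Hypothetical type guard: narrows an arbitrary endpoint name to KnownEndpoint.
function isKnownEndpoint(name: string): name is KnownEndpoint {
  return knownNames.indexOf(name) !== -1;
}

const endpointName: string = 'together.ai';
if (isKnownEndpoint(endpointName)) {
  console.log(`${endpointName} is a known endpoint`); // prints: together.ai is a known endpoint
}
```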