mirror of https://github.com/danny-avila/LibreChat.git

✋ feat: Stop Sequences for Conversations & Presets (#2536)

* feat: `stop` conversation parameter
* feat: Tag primitive
* feat: dynamic tags
* refactor: update tag styling
* feat: add stop sequences to OpenAI settings
* fix(Presentation): prevent `SidePanel` re-renders that flicker side panel
* refactor: use stop placeholder
* feat: type and schema update for `stop` and `TPreset` in generation param related types
* refactor: pass conversation to dynamic settings
* refactor(OpenAIClient): remove default handling for `modelOptions.stop`
* docs: fix Google AI Setup formatting
* feat: current_model
* docs: WIP update
* fix(ChatRoute): prevent default preset override before `hasSetConversation.current` becomes true by including latest conversation state as template
* docs: update docs with more info on `stop`
* chore: bump config_version
* refactor: CURRENT_MODEL handling

parent 4121818124
commit 099aa9dead

29 changed files with 690 additions and 93 deletions

@@ -1,6 +1,7 @@
 const OpenAI = require('openai');
 const { HttpsProxyAgent } = require('https-proxy-agent');
 const {
+Constants,
 ImageDetail,
 EModelEndpoint,
 resolveHeaders,

@@ -20,9 +21,9 @@ const {
 const {
 truncateText,
 formatMessage,
-createContextHandlers,
 CUT_OFF_PROMPT,
 titleInstruction,
+createContextHandlers,
 } = require('./prompts');
 const { encodeAndFormat } = require('~/server/services/Files/images/encode');
 const { handleOpenAIErrors } = require('./tools/util');

@ -200,16 +201,6 @@ class OpenAIClient extends BaseClient {

|

|||

|

||||

this.setupTokens();

|

||||

|

||||

if (!this.modelOptions.stop && !this.isVisionModel) {

|

||||

const stopTokens = [this.startToken];

|

||||

if (this.endToken && this.endToken !== this.startToken) {

|

||||

stopTokens.push(this.endToken);

|

||||

}

|

||||

stopTokens.push(`\n${this.userLabel}:`);

|

||||

stopTokens.push('<|diff_marker|>');

|

||||

this.modelOptions.stop = stopTokens;

|

||||

}

|

||||

|

||||

if (reverseProxy) {

|

||||

this.completionsUrl = reverseProxy;

|

||||

this.langchainProxy = extractBaseURL(reverseProxy);

|

||||

|

|

@ -729,7 +720,10 @@ class OpenAIClient extends BaseClient {

|

|||

|

||||

const { OPENAI_TITLE_MODEL } = process.env ?? {};

|

||||

|

||||

const model = this.options.titleModel ?? OPENAI_TITLE_MODEL ?? 'gpt-3.5-turbo';

|

||||

let model = this.options.titleModel ?? OPENAI_TITLE_MODEL ?? 'gpt-3.5-turbo';

|

||||

if (model === Constants.CURRENT_MODEL) {

|

||||

model = this.modelOptions.model;

|

||||

}

|

||||

|

||||

const modelOptions = {

|

||||

// TODO: remove the gpt fallback and make it specific to endpoint

|

||||

|

|

@ -851,7 +845,11 @@ ${convo}

|

|||

|

||||

// TODO: remove the gpt fallback and make it specific to endpoint

|

||||

const { OPENAI_SUMMARY_MODEL = 'gpt-3.5-turbo' } = process.env ?? {};

|

||||

const model = this.options.summaryModel ?? OPENAI_SUMMARY_MODEL;

|

||||

let model = this.options.summaryModel ?? OPENAI_SUMMARY_MODEL;

|

||||

if (model === Constants.CURRENT_MODEL) {

|

||||

model = this.modelOptions.model;

|

||||

}

|

||||

|

||||

const maxContextTokens =

|

||||

getModelMaxTokens(

|

||||

model,

|

||||

|

|

|

|||

|

|
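Both the title and summary paths above now resolve their model the same way: an explicit option wins, then the environment variable, then the hard-coded fallback, and the sentinel value is swapped for the conversation's own model. A minimal TypeScript sketch of that precedence, assuming `Constants.CURRENT_MODEL` holds the string `"current_model"` (the value the Ollama docs in this commit recommend); `resolveTaskModel` is a hypothetical name, not a function in the codebase.

```typescript
// Assumption: mirrors Constants.CURRENT_MODEL from librechat-data-provider.
const CURRENT_MODEL = 'current_model';

/**
 * Hypothetical helper: picks the model for an auxiliary task such as
 * title or summary generation.
 * Precedence: explicit option, then environment variable, then fallback.
 * The "current_model" sentinel resolves to the conversation's own model.
 */
function resolveTaskModel(
  optionModel: string | undefined,
  envModel: string | undefined,
  fallbackModel: string,
  conversationModel: string,
): string {
  const model = optionModel ?? envModel ?? fallbackModel;
  return model === CURRENT_MODEL ? conversationModel : model;
}

// An Ollama endpoint configured with titleModel: "current_model"
console.log(resolveTaskModel('current_model', undefined, 'gpt-3.5-turbo', 'llama3')); // llama3
// Nothing configured: the hard-coded fallback is used
console.log(resolveTaskModel(undefined, undefined, 'gpt-3.5-turbo', 'llama3')); // gpt-3.5-turbo
```

Because the sentinel is checked after the whole fallback chain, it takes effect whether it comes from the endpoint config or from the environment variable.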

@ -88,6 +88,7 @@ const conversationPreset = {

|

|||

instructions: {

|

||||

type: String,

|

||||

},

|

||||

stop: { type: [{ type: String }], default: undefined },

|

||||

};

|

||||

|

||||

const agentOptions = {

|

||||

|

|

|

|||

|

|

@ -98,6 +98,7 @@ export default function HeaderOptions() {

|

|||

>

|

||||

<div className="px-4 py-4">

|

||||

<EndpointSettings

|

||||

className="[&::-webkit-scrollbar]:w-2"

|

||||

conversation={conversation}

|

||||

setOption={setOption}

|

||||

isMultiChat={true}

|

||||

|

|

|

|||

|

|

@ -1,4 +1,4 @@

|

|||

import { useEffect } from 'react';

|

||||

import { useEffect, useMemo } from 'react';

|

||||

import { useRecoilValue } from 'recoil';

|

||||

import { FileSources } from 'librechat-data-provider';

|

||||

import type { ExtendedFile } from '~/common';

|

||||

|

|

@ -49,12 +49,16 @@ export default function Presentation({

|

|||

}, [mutateAsync]);

|

||||

|

||||

const isActive = canDrop && isOver;

|

||||

const resizableLayout = localStorage.getItem('react-resizable-panels:layout');

|

||||

const collapsedPanels = localStorage.getItem('react-resizable-panels:collapsed');

|

||||

|

||||

const defaultLayout = resizableLayout ? JSON.parse(resizableLayout) : undefined;

|

||||

const defaultCollapsed = collapsedPanels ? JSON.parse(collapsedPanels) : undefined;

|

||||

const fullCollapse = localStorage.getItem('fullPanelCollapse') === 'true';

|

||||

const defaultLayout = useMemo(() => {

|

||||

const resizableLayout = localStorage.getItem('react-resizable-panels:layout');

|

||||

return resizableLayout ? JSON.parse(resizableLayout) : undefined;

|

||||

}, []);

|

||||

const defaultCollapsed = useMemo(() => {

|

||||

const collapsedPanels = localStorage.getItem('react-resizable-panels:collapsed');

|

||||

return collapsedPanels ? JSON.parse(collapsedPanels) : undefined;

|

||||

}, []);

|

||||

const fullCollapse = useMemo(() => localStorage.getItem('fullPanelCollapse') === 'true', []);

|

||||

|

||||

const layout = () => (

|

||||

<div className="transition-width relative flex h-full w-full flex-1 flex-col items-stretch overflow-hidden bg-white pt-0 dark:bg-gray-800">

|

||||

|

|

|

|||

|

|

@ -27,9 +27,7 @@ export default function Settings({

|

|||

|

||||

if (OptionComponent) {

|

||||

return (

|

||||

<div

|

||||

className={cn('hide-scrollbar h-[500px] overflow-y-auto md:mb-2 md:h-[350px]', className)}

|

||||

>

|

||||

<div className={cn('h-[500px] overflow-y-auto md:mb-2 md:h-[350px]', className)}>

|

||||

<OptionComponent

|

||||

conversation={conversation}

|

||||

setOption={setOption}

|

||||

|

|

|

|||

|

|

@ -1,5 +1,12 @@

|

|||

import { useMemo } from 'react';

|

||||

import TextareaAutosize from 'react-textarea-autosize';

|

||||

import { ImageDetail, imageDetailNumeric, imageDetailValue } from 'librechat-data-provider';

|

||||

import * as InputNumberPrimitive from 'rc-input-number';

|

||||

import {

|

||||

EModelEndpoint,

|

||||

ImageDetail,

|

||||

imageDetailNumeric,

|

||||

imageDetailValue,

|

||||

} from 'librechat-data-provider';

|

||||

import {

|

||||

Input,

|

||||

Label,

|

||||

|

|

@ -10,12 +17,15 @@ import {

|

|||

SelectDropDown,

|

||||

HoverCardTrigger,

|

||||

} from '~/components/ui';

|

||||

import { cn, defaultTextProps, optionText, removeFocusOutlines } from '~/utils/';

|

||||

import { cn, defaultTextProps, optionText, removeFocusOutlines } from '~/utils';

|

||||

import { DynamicTags } from '~/components/SidePanel/Parameters';

|

||||

import { useLocalize, useDebouncedInput } from '~/hooks';

|

||||

import type { TModelSelectProps } from '~/common';

|

||||

import OptionHover from './OptionHover';

|

||||

import { ESide } from '~/common';

|

||||

|

||||

type OnInputNumberChange = InputNumberPrimitive.InputNumberProps['onChange'];

|

||||

|

||||

export default function Settings({ conversation, setOption, models, readonly }: TModelSelectProps) {

|

||||

const localize = useLocalize();

|

||||

const {

|

||||

|

|

@ -62,6 +72,12 @@ export default function Settings({ conversation, setOption, models, readonly }:

|

|||

initialValue: presP,

|

||||

});

|

||||

|

||||

const optionEndpoint = useMemo(() => endpointType ?? endpoint, [endpoint, endpointType]);

|

||||

const isOpenAI = useMemo(

|

||||

() => optionEndpoint === EModelEndpoint.openAI || optionEndpoint === EModelEndpoint.azureOpenAI,

|

||||

[optionEndpoint],

|

||||

);

|

||||

|

||||

if (!conversation) {

|

||||

return null;

|

||||

}

|

||||

|

|

@ -70,8 +86,6 @@ export default function Settings({ conversation, setOption, models, readonly }:

|

|||

const setResendFiles = setOption('resendFiles');

|

||||

const setImageDetail = setOption('imageDetail');

|

||||

|

||||

const optionEndpoint = endpointType ?? endpoint;

|

||||

|

||||

return (

|

||||

<div className="grid grid-cols-5 gap-6">

|

||||

<div className="col-span-5 flex flex-col items-center justify-start gap-6 sm:col-span-3">

|

||||

|

|

@ -120,6 +134,22 @@ export default function Settings({ conversation, setOption, models, readonly }:

|

|||

)}

|

||||

/>

|

||||

</div>

|

||||

<div className="grid w-full items-start gap-2">

|

||||

<DynamicTags

|

||||

settingKey="stop"

|

||||

setOption={setOption}

|

||||

label="com_endpoint_stop"

|

||||

labelCode={true}

|

||||

description="com_endpoint_openai_stop"

|

||||

descriptionCode={true}

|

||||

placeholder="com_endpoint_stop_placeholder"

|

||||

placeholderCode={true}

|

||||

descriptionSide="right"

|

||||

maxTags={isOpenAI ? 4 : undefined}

|

||||

conversation={conversation}

|

||||

readonly={readonly}

|

||||

/>

|

||||

</div>

|

||||

</div>

|

||||

<div className="col-span-5 flex flex-col items-center justify-start gap-6 px-3 sm:col-span-2">

|

||||

<HoverCard openDelay={300}>

|

||||

|

|

@ -133,9 +163,10 @@ export default function Settings({ conversation, setOption, models, readonly }:

|

|||

</Label>

|

||||

<InputNumber

|

||||

id="temp-int"

|

||||

stringMode={false}

|

||||

disabled={readonly}

|

||||

value={temperatureValue as number}

|

||||

onChange={setTemperature}

|

||||

onChange={setTemperature as OnInputNumberChange}

|

||||

max={2}

|

||||

min={0}

|

||||

step={0.01}

|

||||

|

|

|

|||

|

|

@@ -20,9 +20,10 @@ function DynamicCheckbox({
 showDefault = true,
 labelCode,
 descriptionCode,
+conversation,
 }: DynamicSettingProps) {
 const localize = useLocalize();
-const { conversation = { conversationId: null }, preset } = useChatContext();
+const { preset } = useChatContext();
 const [inputValue, setInputValue] = useState<boolean>(!!(defaultValue as boolean | undefined));

 const selectedValue = useMemo(() => {

@@ -22,9 +22,10 @@ function DynamicDropdown({
 showDefault = true,
 labelCode,
 descriptionCode,
+conversation,
 }: DynamicSettingProps) {
 const localize = useLocalize();
-const { conversation = { conversationId: null }, preset } = useChatContext();
+const { preset } = useChatContext();
 const [inputValue, setInputValue] = useState<string | null>(null);

 const selectedValue = useMemo(() => {

@@ -22,9 +22,10 @@ function DynamicInput({
 labelCode,
 descriptionCode,
 placeholderCode,
+conversation,
 }: DynamicSettingProps) {
 const localize = useLocalize();
-const { conversation = { conversationId: null }, preset } = useChatContext();
+const { preset } = useChatContext();

 const [setInputValue, inputValue] = useDebouncedInput<string | null>({
 optionKey: optionType !== OptionTypes.Custom ? settingKey : undefined,

@@ -23,9 +23,10 @@ function DynamicSlider({
 includeInput = true,
 labelCode,
 descriptionCode,
+conversation,
 }: DynamicSettingProps) {
 const localize = useLocalize();
-const { conversation = { conversationId: null }, preset } = useChatContext();
+const { preset } = useChatContext();
 const isEnum = useMemo(() => !range && options && options.length > 0, [options, range]);

 const [setInputValue, inputValue] = useDebouncedInput<string | number>({

@@ -19,9 +19,10 @@ function DynamicSwitch({
 showDefault = true,
 labelCode,
 descriptionCode,
+conversation,
 }: DynamicSettingProps) {
 const localize = useLocalize();
-const { conversation = { conversationId: null }, preset } = useChatContext();
+const { preset } = useChatContext();
 const [inputValue, setInputValue] = useState<boolean>(!!(defaultValue as boolean | undefined));
 useParameterEffects({
 preset,

client/src/components/SidePanel/Parameters/DynamicTags.tsx (Normal file, 193 changes)

@ -0,0 +1,193 @@

|

|||

// client/src/components/SidePanel/Parameters/DynamicTags.tsx

|

||||

import { useState, useMemo, useCallback, useRef } from 'react';

|

||||

import { OptionTypes } from 'librechat-data-provider';

|

||||

import type { DynamicSettingProps } from 'librechat-data-provider';

|

||||

import { Label, Input, HoverCard, HoverCardTrigger, Tag } from '~/components/ui';

|

||||

import { useChatContext, useToastContext } from '~/Providers';

|

||||

import { useLocalize, useParameterEffects } from '~/hooks';

|

||||

import { cn, defaultTextProps } from '~/utils';

|

||||

import OptionHover from './OptionHover';

|

||||

import { ESide } from '~/common';

|

||||

|

||||

function DynamicTags({

|

||||

label,

|

||||

settingKey,

|

||||

defaultValue = [],

|

||||

description,

|

||||

columnSpan,

|

||||

setOption,

|

||||

optionType,

|

||||

placeholder,

|

||||

readonly = false,

|

||||

showDefault = true,

|

||||

labelCode,

|

||||

descriptionCode,

|

||||

placeholderCode,

|

||||

descriptionSide = ESide.Left,

|

||||

conversation,

|

||||

minTags,

|

||||

maxTags,

|

||||

}: DynamicSettingProps) {

|

||||

const localize = useLocalize();

|

||||

const { preset } = useChatContext();

|

||||

const { showToast } = useToastContext();

|

||||

const inputRef = useRef<HTMLInputElement>(null);

|

||||

const [tagText, setTagText] = useState<string>('');

|

||||

const [tags, setTags] = useState<string[] | undefined>(

|

||||

(defaultValue as string[] | undefined) ?? [],

|

||||

);

|

||||

|

||||

const updateState = useCallback(

|

||||

(update: string[]) => {

|

||||

if (optionType === OptionTypes.Custom) {

|

||||

// TODO: custom logic, add to payload but not to conversation

|

||||

setTags(update);

|

||||

return;

|

||||

}

|

||||

setOption(settingKey)(update);

|

||||

},

|

||||

[optionType, setOption, settingKey],

|

||||

);

|

||||

|

||||

const onTagClick = useCallback(() => {

|

||||

if (inputRef.current) {

|

||||

inputRef.current.focus();

|

||||

}

|

||||

}, [inputRef]);

|

||||

|

||||

const currentTags: string[] | undefined = useMemo(() => {

|

||||

if (optionType === OptionTypes.Custom) {

|

||||

// TODO: custom logic, add to payload but not to conversation

|

||||

return tags;

|

||||

}

|

||||

|

||||

if (!conversation?.[settingKey]) {

|

||||

return defaultValue ?? [];

|

||||

}

|

||||

|

||||

return conversation?.[settingKey];

|

||||

}, [conversation, defaultValue, optionType, settingKey, tags]);

|

||||

|

||||

const onTagRemove = useCallback(

|

||||

(indexToRemove: number) => {

|

||||

if (!currentTags) {

|

||||

return;

|

||||

}

|

||||

|

||||

if (minTags && currentTags.length <= minTags) {

|

||||

showToast({

|

||||

message: localize('com_ui_min_tags', minTags + ''),

|

||||

status: 'warning',

|

||||

});

|

||||

return;

|

||||

}

|

||||

const update = currentTags.filter((_, index) => index !== indexToRemove);

|

||||

updateState(update);

|

||||

},

|

||||

[localize, minTags, currentTags, showToast, updateState],

|

||||

);

|

||||

|

||||

const onTagAdd = useCallback(() => {

|

||||

if (!tagText) {

|

||||

return;

|

||||

}

|

||||

|

||||

let update = [...(currentTags ?? []), tagText];

|

||||

if (maxTags && update.length > maxTags) {

|

||||

showToast({

|

||||

message: localize('com_ui_max_tags', maxTags + ''),

|

||||

status: 'warning',

|

||||

});

|

||||

update = update.slice(-maxTags);

|

||||

}

|

||||

updateState(update);

|

||||

setTagText('');

|

||||

}, [tagText, currentTags, updateState, maxTags, showToast, localize]);

|

||||

|

||||

useParameterEffects({

|

||||

preset,

|

||||

settingKey,

|

||||

defaultValue: typeof defaultValue === 'undefined' ? [] : defaultValue,

|

||||

inputValue: tags,

|

||||

setInputValue: setTags,

|

||||

preventDelayedUpdate: true,

|

||||

conversation,

|

||||

});

|

||||

|

||||

return (

|

||||

<div

|

||||

className={`flex flex-col items-center justify-start gap-6 ${

|

||||

columnSpan ? `col-span-${columnSpan}` : 'col-span-full'

|

||||

}`}

|

||||

>

|

||||

<HoverCard openDelay={300}>

|

||||

<HoverCardTrigger className="grid w-full items-center gap-2">

|

||||

<div className="flex w-full justify-between">

|

||||

<Label

|

||||

htmlFor={`${settingKey}-dynamic-input`}

|

||||

className="text-left text-sm font-medium"

|

||||

>

|

||||

{labelCode ? localize(label ?? '') || label : label ?? settingKey}{' '}

|

||||

{showDefault && (

|

||||

<small className="opacity-40">

|

||||

(

|

||||

{typeof defaultValue === 'undefined' || !(defaultValue as string)?.length

|

||||

? localize('com_endpoint_default_blank')

|

||||

: `${localize('com_endpoint_default')}: ${defaultValue}`}

|

||||

)

|

||||

</small>

|

||||

)}

|

||||

</Label>

|

||||

</div>

|

||||

<div>

|

||||

<div className="bg-muted mb-2 flex flex-wrap gap-1 break-all rounded-lg">

|

||||

{currentTags?.map((tag: string, index: number) => (

|

||||

<Tag

|

||||

key={`${tag}-${index}`}

|

||||

label={tag}

|

||||

onClick={onTagClick}

|

||||

onRemove={() => {

|

||||

onTagRemove(index);

|

||||

if (inputRef.current) {

|

||||

inputRef.current.focus();

|

||||

}

|

||||

}}

|

||||

/>

|

||||

))}

|

||||

<Input

|

||||

ref={inputRef}

|

||||

id={`${settingKey}-dynamic-input`}

|

||||

disabled={readonly}

|

||||

value={tagText}

|

||||

onKeyDown={(e) => {

|

||||

if (!currentTags) {

|

||||

return;

|

||||

}

|

||||

if (e.key === 'Backspace' && !tagText) {

|

||||

onTagRemove(currentTags.length - 1);

|

||||

}

|

||||

if (e.key === 'Enter') {

|

||||

onTagAdd();

|

||||

}

|

||||

}}

|

||||

onChange={(e) => setTagText(e.target.value)}

|

||||

placeholder={

|

||||

placeholderCode ? localize(placeholder ?? '') || placeholder : placeholder

|

||||

}

|

||||

className={cn(defaultTextProps, 'flex h-10 max-h-10 px-3 py-2')}

|

||||

/>

|

||||

</div>

|

||||

</div>

|

||||

</HoverCardTrigger>

|

||||

{description && (

|

||||

<OptionHover

|

||||

description={descriptionCode ? localize(description) || description : description}

|

||||

side={descriptionSide as ESide}

|

||||

/>

|

||||

)}

|

||||

</HoverCard>

|

||||

</div>

|

||||

);

|

||||

}

|

||||

|

||||

export default DynamicTags;

|

||||

|

|
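The add/remove handlers in `DynamicTags` enforce `maxTags` and `minTags` before calling `updateState`. The sketch below pulls that logic into pure functions so it can be exercised without React; `addTag`, `removeTag` and the `warning` field are hypothetical names standing in for the component's toast calls, not LibreChat exports.

```typescript
// Hypothetical pure versions of onTagAdd / onTagRemove from DynamicTags.tsx.
interface TagLimits {
  minTags?: number;
  maxTags?: number;
}

interface TagUpdate {
  tags: string[];
  // Stands in for the toast the component shows ('com_ui_min_tags' / 'com_ui_max_tags').
  warning?: 'min' | 'max';
}

function addTag(current: string[], text: string, { maxTags }: TagLimits): TagUpdate {
  if (!text) {
    return { tags: current }; // empty input: nothing to add
  }
  const update = [...current, text];
  if (maxTags && update.length > maxTags) {
    // Keep only the newest `maxTags` values, like update.slice(-maxTags) in the component.
    return { tags: update.slice(-maxTags), warning: 'max' };
  }
  return { tags: update };
}

function removeTag(current: string[], indexToRemove: number, { minTags }: TagLimits): TagUpdate {
  if (minTags && current.length <= minTags) {
    return { tags: current, warning: 'min' }; // refuse to go below the minimum
  }
  return { tags: current.filter((_, index) => index !== indexToRemove) };
}

const limits: TagLimits = { minTags: 1, maxTags: 4 };
console.log(addTag(['###', 'User:', 'END', '</s>'], 'STOP', limits).tags);
// [ 'User:', 'END', '</s>', 'STOP' ]
console.log(removeTag(['###'], 0, limits).warning); // min
```

With `maxTags: 4` (what the OpenAI settings pass for the OpenAI and Azure OpenAI endpoints), a fifth sequence pushes out the oldest one rather than being rejected, which matches the "using latest values" wording of `com_ui_max_tags`.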

@@ -22,9 +22,10 @@ function DynamicTextarea({
 labelCode,
 descriptionCode,
 placeholderCode,
+conversation,
 }: DynamicSettingProps) {
 const localize = useLocalize();
-const { conversation = { conversationId: null }, preset } = useChatContext();
+const { preset } = useChatContext();

 const [setInputValue, inputValue] = useDebouncedInput<string | null>({
 optionKey: optionType !== OptionTypes.Custom ? settingKey : undefined,

@@ -5,12 +5,16 @@ import type {
 SettingsConfiguration,
 } from 'librechat-data-provider';
 import { useSetIndexOptions } from '~/hooks';
-import DynamicDropdown from './DynamicDropdown';
-import DynamicCheckbox from './DynamicCheckbox';
-import DynamicTextarea from './DynamicTextarea';
-import DynamicSlider from './DynamicSlider';
-import DynamicSwitch from './DynamicSwitch';
-import DynamicInput from './DynamicInput';
+import { useChatContext } from '~/Providers';
+import {
+DynamicDropdown,
+DynamicCheckbox,
+DynamicTextarea,
+DynamicSlider,
+DynamicSwitch,
+DynamicInput,
+DynamicTags,
+} from './';

 const settingsConfiguration: SettingsConfiguration = [
 {

@ -129,6 +133,22 @@ const settingsConfiguration: SettingsConfiguration = [

|

|||

showDefault: false,

|

||||

columnSpan: 2,

|

||||

},

|

||||

{

|

||||

key: 'stop',

|

||||

label: 'com_endpoint_stop',

|

||||

labelCode: true,

|

||||

description: 'com_endpoint_openai_stop',

|

||||

descriptionCode: true,

|

||||

placeholder: 'com_endpoint_stop_placeholder',

|

||||

placeholderCode: true,

|

||||

type: 'array',

|

||||

default: [],

|

||||

component: 'tags',

|

||||

optionType: 'conversation',

|

||||

columnSpan: 4,

|

||||

minTags: 1,

|

||||

maxTags: 4,

|

||||

},

|

||||

];

|

||||

|

||||

const componentMapping: Record<ComponentTypes, React.ComponentType<DynamicSettingProps>> = {

|

||||

|

|

@ -138,9 +158,11 @@ const componentMapping: Record<ComponentTypes, React.ComponentType<DynamicSettin

|

|||

[ComponentTypes.Textarea]: DynamicTextarea,

|

||||

[ComponentTypes.Input]: DynamicInput,

|

||||

[ComponentTypes.Checkbox]: DynamicCheckbox,

|

||||

[ComponentTypes.Tags]: DynamicTags,

|

||||

};

|

||||

|

||||

export default function Parameters() {

|

||||

const { conversation } = useChatContext();

|

||||

const { setOption } = useSetIndexOptions();

|

||||

|

||||

const temperature = settingsConfiguration.find(

|

||||

|

|

@ -173,6 +195,10 @@ export default function Parameters() {

|

|||

const Input = componentMapping[chatGptLabel.component];

|

||||

const { key: inputKey, default: inputDefault, ...inputSettings } = chatGptLabel;

|

||||

|

||||

const stop = settingsConfiguration.find((setting) => setting.key === 'stop') as SettingDefinition;

|

||||

const Tags = componentMapping[stop.component];

|

||||

const { key: stopKey, default: stopDefault, ...stopSettings } = stop;

|

||||

|

||||

return (

|

||||

<div className="h-auto max-w-full overflow-x-hidden p-3">

|

||||

<div className="grid grid-cols-4 gap-6">

|

||||

|

|

@ -184,30 +210,42 @@ export default function Parameters() {

|

|||

defaultValue={inputDefault}

|

||||

{...inputSettings}

|

||||

setOption={setOption}

|

||||

conversation={conversation}

|

||||

/>

|

||||

<Textarea

|

||||

settingKey={textareaKey}

|

||||

defaultValue={textareaDefault}

|

||||

{...textareaSettings}

|

||||

setOption={setOption}

|

||||

conversation={conversation}

|

||||

/>

|

||||

<TempComponent

|

||||

settingKey={temp}

|

||||

defaultValue={tempDefault}

|

||||

{...tempSettings}

|

||||

setOption={setOption}

|

||||

conversation={conversation}

|

||||

/>

|

||||

<Switch

|

||||

settingKey={switchKey}

|

||||

defaultValue={switchDefault}

|

||||

{...switchSettings}

|

||||

setOption={setOption}

|

||||

conversation={conversation}

|

||||

/>

|

||||

<DetailComponent

|

||||

settingKey={detail}

|

||||

defaultValue={detailDefault}

|

||||

{...detailSettings}

|

||||

setOption={setOption}

|

||||

conversation={conversation}

|

||||

/>

|

||||

<Tags

|

||||

settingKey={stopKey}

|

||||

defaultValue={stopDefault}

|

||||

{...stopSettings}

|

||||

setOption={setOption}

|

||||

conversation={conversation}

|

||||

/>

|

||||

</div>

|

||||

</div>

|

||||

|

|

|

|||
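Each entry in `settingsConfiguration` is split into its key, its default and the remaining props, then rendered by whatever component `componentMapping` registers for its `component` type. A simplified sketch of that lookup; the types are trimmed-down assumptions rather than the real `librechat-data-provider` definitions, and components are modelled as plain functions returning strings so the example runs without React.

```typescript
// Trimmed-down stand-ins for the librechat-data-provider types (assumptions).
type ComponentKind = 'input' | 'tags';

interface SettingDefinition {
  key: string;
  component: ComponentKind;
  default?: unknown;
  label?: string;
  maxTags?: number;
}

interface DynamicProps {
  settingKey: string;
  defaultValue?: unknown;
  label?: string;
  maxTags?: number;
}

// Components modelled as plain functions so the sketch runs without React.
const componentMapping: Record<ComponentKind, (props: DynamicProps) => string> = {
  input: (props) => `input:${props.settingKey}`,
  tags: (props) => `tags:${props.settingKey}(max ${props.maxTags ?? 'unlimited'})`,
};

function renderSetting(setting: SettingDefinition): string {
  // Like `const { key: stopKey, default: stopDefault, ...stopSettings } = stop;`,
  // except `component` is also pulled out because DynamicProps does not declare it.
  const { key, default: defaultValue, component, ...rest } = setting;
  const render = componentMapping[component];
  return render({ settingKey: key, defaultValue, ...rest });
}

const stop: SettingDefinition = {
  key: 'stop',
  component: 'tags',
  default: [],
  label: 'com_endpoint_stop',
  maxTags: 4,
};
console.log(renderSetting(stop)); // tags:stop(max 4)
```

Keeping limits such as `maxTags` in the configuration object means a new setting only needs a config entry plus a registered component, without touching the render code.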

client/src/components/SidePanel/Parameters/index.ts (Normal file, 7 changes)

@ -0,0 +1,7 @@

|

|||

export { default as DynamicDropdown } from './DynamicDropdown';

|

||||

export { default as DynamicCheckbox } from './DynamicCheckbox';

|

||||

export { default as DynamicTextarea } from './DynamicTextarea';

|

||||

export { default as DynamicSlider } from './DynamicSlider';

|

||||

export { default as DynamicSwitch } from './DynamicSwitch';

|

||||

export { default as DynamicInput } from './DynamicInput';

|

||||

export { default as DynamicTags } from './DynamicTags';

|

||||

client/src/components/ui/Tag.tsx (Normal file, 43 changes)

@ -0,0 +1,43 @@

|

|||

import * as React from 'react';

|

||||

import { X } from 'lucide-react';

|

||||

import { cn } from '~/utils';

|

||||

|

||||

type TagProps = React.ComponentPropsWithoutRef<'div'> & {

|

||||

label: string;

|

||||

labelClassName?: string;

|

||||

CancelButton?: React.ReactNode;

|

||||

onRemove: (e: React.MouseEvent<HTMLButtonElement>) => void;

|

||||

};

|

||||

|

||||

const TagPrimitiveRoot = React.forwardRef<HTMLDivElement, TagProps>(

|

||||

({ CancelButton, label, onRemove, className = '', labelClassName = '', ...props }, ref) => (

|

||||

<div

|

||||

ref={ref}

|

||||

{...props}

|

||||

className={cn(

|

||||

'flex max-h-8 items-center overflow-y-hidden rounded rounded-3xl border-2 border-green-600 bg-green-600/20 text-sm text-xs text-white',

|

||||

className,

|

||||

)}

|

||||

>

|

||||

<div className={cn('ml-1 whitespace-pre-wrap px-2 py-1', labelClassName)}>{label}</div>

|

||||

{CancelButton ? (

|

||||

CancelButton

|

||||

) : (

|

||||

<button

|

||||

onClick={(e) => {

|

||||

e.stopPropagation();

|

||||

onRemove(e);

|

||||

}}

|

||||

className="rounded-full bg-green-600/50"

|

||||

aria-label={`Remove ${label}`}

|

||||

>

|

||||

<X className="m-[1.5px] p-1" />

|

||||

</button>

|

||||

)}

|

||||

</div>

|

||||

),

|

||||

);

|

||||

|

||||

TagPrimitiveRoot.displayName = 'Tag';

|

||||

|

||||

export const Tag = React.memo(TagPrimitiveRoot);

|

||||

|

|

@@ -16,6 +16,7 @@ export * from './Separator';
 export * from './Switch';
 export * from './Table';
 export * from './Tabs';
+export * from './Tag';
 export * from './Templates';
 export * from './Textarea';
 export * from './TextareaAutosize';

@@ -12,7 +12,7 @@ function useParameterEffects<T = unknown>({
 preventDelayedUpdate = false,
 }: Pick<DynamicSettingProps, 'settingKey' | 'defaultValue'> & {
 preset: TPreset | null;
-conversation: TConversation | { conversationId: null } | null;
+conversation?: TConversation | TPreset | null;
 inputValue: T;
 setInputValue: (inputValue: T) => void;
 preventDelayedUpdate?: boolean;

@@ -155,6 +155,8 @@ export default {
 'Uploading "{0}" is taking more time than anticipated. Please wait while the file finishes indexing for retrieval.',
 com_ui_privacy_policy: 'Privacy policy',
 com_ui_terms_of_service: 'Terms of service',
+com_ui_min_tags: 'Cannot remove more values, a minimum of {0} are required.',
+com_ui_max_tags: 'Maximum number allowed is {0}, using latest values.',
 com_auth_error_login:
 'Unable to login with the information provided. Please check your credentials and try again.',
 com_auth_error_login_rl:

@@ -257,6 +259,8 @@ export default {
 com_endpoint_top_p: 'Top P',
 com_endpoint_top_k: 'Top K',
 com_endpoint_max_output_tokens: 'Max Output Tokens',
+com_endpoint_stop: 'Stop Sequences',
+com_endpoint_stop_placeholder: 'Separate values by pressing `Enter`',
 com_endpoint_openai_temp:
 'Higher values = more random, while lower values = more focused and deterministic. We recommend altering this or Top P but not both.',
 com_endpoint_openai_max:

@@ -273,6 +277,7 @@ export default {
 'Resend all previously attached files. Note: this will increase token cost and you may experience errors with many attachments.',
 com_endpoint_openai_detail:
 'The resolution for Vision requests. "Low" is cheaper and faster, "High" is more detailed and expensive, and "Auto" will automatically choose between the two based on the image resolution.',
+com_endpoint_openai_stop: 'Up to 4 sequences where the API will stop generating further tokens.',
 com_endpoint_openai_custom_name_placeholder: 'Set a custom name for the AI',
 com_endpoint_openai_prompt_prefix_placeholder:
 'Set custom instructions to include in System Message. Default: none',
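The new strings `com_ui_min_tags` and `com_ui_max_tags` carry a positional `{0}` placeholder, which `DynamicTags` fills by passing `minTags + ''` or `maxTags + ''` as the second argument to `localize`. A minimal sketch of positional substitution, assuming `{n}` is replaced by the n-th extra argument; `fillPlaceholders` is hypothetical and not LibreChat's `useLocalize` implementation.

```typescript
// Hypothetical helper: replaces positional placeholders such as {0} with extra arguments.
function fillPlaceholders(template: string, ...values: string[]): string {
  return template.replace(/\{(\d+)\}/g, (match: string, index: string) => {
    const value = values[Number(index)];
    // Leave a placeholder untouched when no value was supplied for it.
    return value !== undefined ? value : match;
  });
}

const maxTags = 4;
console.log(fillPlaceholders('Maximum number allowed is {0}, using latest values.', maxTags + ''));
// Maximum number allowed is 4, using latest values.
```

Passing numbers as strings (`maxTags + ''`) keeps the substitution helper's argument type uniform.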

@@ -54,7 +54,10 @@ export default function ChatRoute() {
 !modelsQuery.data?.initial &&
 !hasSetConversation.current
 ) {
-newConversation({ modelsData: modelsQuery.data });
+newConversation({
+modelsData: modelsQuery.data,
+template: conversation ? conversation : undefined,
+});
 hasSetConversation.current = !!assistants;
 } else if (
 initialConvoQuery.data &&

@@ -77,7 +80,10 @@ export default function ChatRoute() {
 conversationId === 'new' &&
 assistants
 ) {
-newConversation({ modelsData: modelsQuery.data });
+newConversation({
+modelsData: modelsQuery.data,
+template: conversation ? conversation : undefined,
+});
 hasSetConversation.current = true;
 } else if (!hasSetConversation.current && !modelsQuery.data?.initial && assistants) {
 newConversation({

@@ -88,7 +94,7 @@ export default function ChatRoute() {
 });
 hasSetConversation.current = true;
 }
-/* Creates infinite render if all dependencies included */
+/* Creates infinite render if all dependencies included due to newConversation invocations exceeding call stack before hasSetConversation.current becomes truthy */
 // eslint-disable-next-line react-hooks/exhaustive-deps
 }, [initialConvoQuery.data, endpointsQuery.data, modelsQuery.data, assistants]);

@ -260,7 +260,8 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

|||

|

||||

- API is strict with unrecognized parameters and errors are not descriptive (usually "no body")

|

||||

|

||||

- The use of [`dropParams`](./custom_config.md#dropparams) to drop "stop", "user", "frequency_penalty", "presence_penalty" params is required.

|

||||

- The use of [`dropParams`](./custom_config.md#dropparams) to drop "user", "frequency_penalty", "presence_penalty" params is required.

|

||||

- `stop` is no longer included as a default parameter, so there is no longer a need to include it in [`dropParams`](./custom_config.md#dropparams), unless you would like to completely prevent users from configuring this field.

|

||||

|

||||

- Allows fetching the models list, but be careful not to use embedding models for chat.

|

||||

|

||||

|

|
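`dropParams` strips the listed keys from the request body before it is sent. A minimal sketch of that behavior, assuming a flat JSON payload; `applyDropParams` and the example body are illustrative, not LibreChat's implementation. It shows why `stop` can now be left off the list: the key only appears in the body when a user has set stop sequences, so listing it matters only if you want to forbid them.

```typescript
type Payload = Record<string, unknown>;

// Hypothetical stand-in for an endpoint's `dropParams` setting:
// returns a copy of the request body without the listed keys.
function applyDropParams(payload: Payload, dropParams: readonly string[] = []): Payload {
  const result: Payload = { ...payload };
  for (const key of dropParams) {
    delete result[key];
  }
  return result;
}

const body: Payload = {
  model: 'example-model',
  user: 'user-id',
  frequency_penalty: 0,
  presence_penalty: 0,
  stop: ['User:'], // present only because the user configured it
};

const sent = applyDropParams(body, ['user', 'frequency_penalty', 'presence_penalty']);
console.log(Object.keys(sent)); // [ 'model', 'stop' ]
```

Copying before deleting leaves the caller's object untouched, so the same base body can be reused across endpoints with different drop lists.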

@ -289,6 +290,8 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

|||

- **Known:** icon provided.

|

||||

- **Known issue:** fetching list of models is not supported. See [Pull Request 2728](https://github.com/ollama/ollama/pull/2728).

|

||||

- Download models with ollama run command. See [Ollama Library](https://ollama.com/library)

|

||||

- It's recommend to use the value "current_model" for the `titleModel` to avoid loading more than 1 model per conversation.

|

||||

- Doing so will dynamically use the current conversation model for the title generation.

|

||||

- The example includes a top 5 popular model list from the Ollama Library, which was last updated on March 1, 2024, for your convenience.

|

||||

|

||||

```yaml

|

||||

|

|

@@ -306,16 +309,18 @@ Some of the endpoints are marked as **Known,** which means they might have speci

]

fetch: false # fetching list of models is not supported

titleConvo: true

titleModel: "llama2"

titleModel: "current_model"

summarize: false

summaryModel: "llama2"

summaryModel: "current_model"

forcePrompt: false

modelDisplayLabel: "Ollama"

```

!!! tip "Ollama -> llama3"

To prevent the behavior where llama3 does not stop generating, add this `addParams` block to the config:

Note: Once `stop` was removed from the [default parameters](./custom_config.md#default-parameters), the issue highlighted below should no longer exist.

However, in case you experience the behavior where `llama3` does not stop generating, add this `addParams` block to the config:

```yaml

- name: "Ollama"

@@ -327,9 +332,9 @@ Some of the endpoints are marked as **Known,** which means they might have speci

]

fetch: false # fetching list of models is not supported

titleConvo: true

titleModel: "llama3"

titleModel: "current_model"

summarize: false

summaryModel: "llama3"

summaryModel: "current_model"

forcePrompt: false

modelDisplayLabel: "Ollama"

addParams:

@ -341,6 +346,31 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

|||

]

|

||||

```

|

||||

|

||||

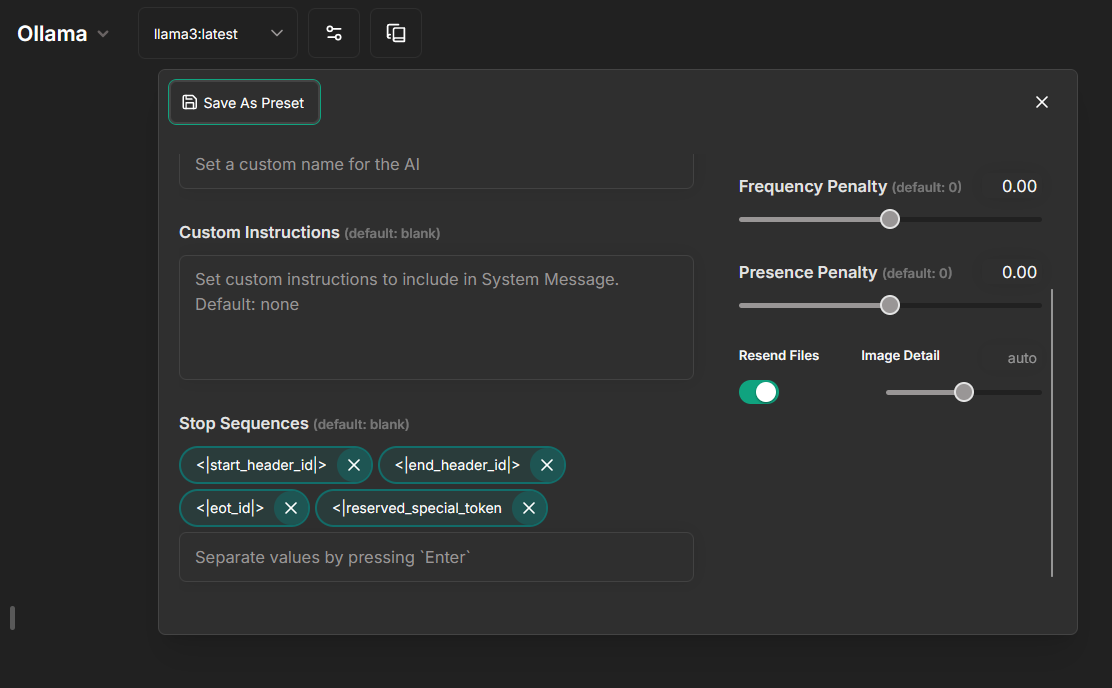

If you are only using `llama3` with **Ollama**, it's fine to set the `stop` parameter at the config level via `addParams`.

|

||||

|

||||

However, if you are using multiple models, it's now recommended to add stop sequences from the frontend via conversation parameters and presets.

|

||||

|

||||

For example, we can omit `addParams`:

|

||||

|

||||

```yaml

|

||||

- name: "Ollama"

|

||||

apiKey: "ollama"

|

||||

baseURL: "http://host.docker.internal:11434/v1/"

|

||||

models:

|

||||

default: [

|

||||

"llama3:latest",

|

||||

"mistral"

|

||||

]

|

||||

fetch: false # fetching list of models is not supported

|

||||

titleConvo: true

|

||||

titleModel: "current_model"

|

||||

modelDisplayLabel: "Ollama"

|

||||

```

|

||||

|

||||

And use these settings (best to also save it):

|

||||

|

||||

|

||||

|

||||

## Openrouter

|

||||

> OpenRouter API key: [openrouter.ai/keys](https://openrouter.ai/keys)

|

||||

|

||||

|

|
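
The `llama3` tip above depends on how stop sequences behave. As a minimal sketch (not LibreChat or Ollama code), the function below simulates the OpenAI-style `stop` semantics described in the OpenAI API reference: output ends at the earliest occurrence of any listed sequence, and the sequence itself is not returned. `applyStopSequences` is a hypothetical name, and a real server halts generation as tokens are produced rather than trimming a finished string.

```typescript
// Hypothetical helper: simulates how an OpenAI-compatible server treats `stop`.
// Output ends at the earliest match, and the matched sequence is not included.
function applyStopSequences(text: string, stop: readonly string[]): string {
  let cut = text.length;
  for (const seq of stop) {
    if (seq.length === 0) continue; // ignore empty entries
    const idx = text.indexOf(seq);
    if (idx !== -1 && idx < cut) cut = idx;
  }
  return text.slice(0, cut);
}

// Example: a per-conversation stop list, such as one set from the frontend.
const stopSequences = ["\nUser:", "<|diff_marker|>"];
console.log(applyStopSequences("Hello there!\nUser: next turn", stopSequences)); // Hello there!
```

This is why a model that emits its own end-of-turn marker keeps "generating" when that marker is not in the stop list: nothing matches, so nothing is cut.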

@@ -348,7 +378,7 @@ Some of the endpoints are marked as **Known,** which means they might have speci

- **Known:** icon provided, fetching list of models is recommended as API token rates and pricing used for token credit balances when models are fetched.

- It's recommended, and for some models required, to use [`dropParams`](./custom_config.md#dropparams) to drop the `stop` parameter as Openrouter models use a variety of stop tokens.

- `stop` is no longer included as a default parameter, so there is no longer a need to include it in [`dropParams`](./custom_config.md#dropparams), unless you would like to completely prevent users from configuring this field.

- **Known issue:** you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.
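
The `dropParams` option referenced in these notes removes parameters before a request is sent. The sketch below assumes it behaves like a plain key filter over the outgoing payload; `applyDropParams` and the `Payload` type are hypothetical names for illustration, not LibreChat's actual implementation.

```typescript
// Hypothetical payload type: any JSON-like request body.
type Payload = Record<string, unknown>;

// Returns a shallow copy of `payload` without the keys named in `dropParams`.
function applyDropParams(payload: Payload, dropParams: readonly string[] = []): Payload {
  const drop = new Set(dropParams);
  const result: Payload = {};
  for (const [key, value] of Object.entries(payload)) {
    if (!drop.has(key)) {
      result[key] = value;
    }
  }
  return result;
}

const examplePayload: Payload = {
  model: "mistral",
  user: "LibreChat_User_ID",
  stop: ["\nUser:"],
  frequency_penalty: 0,
};

// e.g. an endpoint that rejects `user` and should never receive `stop`
console.log(Object.keys(applyDropParams(examplePayload, ["stop", "user"]))); // [ 'model', 'frequency_penalty' ]
```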

@@ -159,7 +159,8 @@ For your convenience, these are the latest models as of 4/15/24 that can be used

GOOGLE_MODELS=gemini-1.0-pro,gemini-1.0-pro-001,gemini-1.0-pro-latest,gemini-1.0-pro-vision-latest,gemini-1.5-pro-latest,gemini-pro,gemini-pro-vision

```

Notes:

**Notes:**

- A gemini-pro model or `gemini-pro-vision` are required in your list for attaching images.

- Using LibreChat, PaLM2 and Codey models can only be accessed through Vertex AI, not the Generative Language API.

- Only models that support the `generateContent` method can be used natively with LibreChat + the Gen AI API.

@@ -92,7 +92,7 @@ To properly integrate Azure OpenAI with LibreChat, specific fields must be accur

These settings apply globally to all Azure models and groups within the endpoint. Here are the available fields:

1. **titleModel** (String, Optional): Specifies the model to use for generating conversation titles. If not provided, the default model is set as `gpt-3.5-turbo`, which will result in no titles if lacking this model.

1. **titleModel** (String, Optional): Specifies the model to use for generating conversation titles. If not provided, the default model is set as `gpt-3.5-turbo`, which will result in no titles if lacking this model. You can also set this to dynamically use the current model by setting it to `current_model`.

2. **plugins** (Boolean, Optional): Enables the use of plugins through Azure. Set to `true` to activate Plugins endpoint support through your Azure config. Default: `false`.

@@ -398,8 +398,11 @@ endpoints:

titleModel: "gpt-3.5-turbo"

```

**Note**: "gpt-3.5-turbo" is the default value, so you can omit it if you want to use this exact model and have it configured. If not configured and `titleConvo` is set to `true`, the titling process will result in an error and no title will be generated.

**Note**: "gpt-3.5-turbo" is the default value, so you can omit it if you want to use this exact model and have it configured. If not configured and `titleConvo` is set to `true`, the titling process will result in an error and no title will be generated. You can also set this to dynamically use the current model by setting it to `current_model`.

```yaml

titleModel: "current_model"

```

### Using GPT-4 Vision with Azure

@@ -24,7 +24,9 @@ Stay tuned for ongoing enhancements to customize your LibreChat instance!

## Compatible Endpoints

Any API designed to be compatible with OpenAI's should be supported, but here is a list of **[known compatible endpoints](./ai_endpoints.md) including example setups.**

Any API designed to be compatible with OpenAI's should be supported

Here is a list of **[known compatible endpoints](./ai_endpoints.md) including example setups.**

## Setup

@@ -665,6 +667,7 @@ endpoints:

- Type: String

- Example: `titleModel: "mistral-tiny"`

- **Note**: Defaults to "gpt-3.5-turbo" if omitted. May cause issues if "gpt-3.5-turbo" is not available.

- **Note**: You can also dynamically use the current conversation model by setting it to "current_model".

### **summarize**:

@@ -752,11 +755,6 @@ Custom endpoints share logic with the OpenAI endpoint, and thus have default par

"top_p": 1,

"presence_penalty": 0,

"frequency_penalty": 0,

"stop": [

"||>",

"\nUser:",

"<|diff_marker|>",

],

"user": "LibreChat_User_ID",

"stream": true,

"messages": [

@@ -773,7 +771,6 @@ Custom endpoints share logic with the OpenAI endpoint, and thus have default par

- `top_p`: Defaults to `1` if not provided via preset,

- `presence_penalty`: Defaults to `0` if not provided via preset,

- `frequency_penalty`: Defaults to `0` if not provided via preset,

- `stop`: Sequences where the AI will stop generating further tokens. By default, uses the start token (`||>`), the user label (`\nUser:`), and end token (`<|diff_marker|>`). Up to 4 sequences can be provided to the [OpenAI API](https://platform.openai.com/docs/api-reference/chat/create#chat-create-stop)

- `user`: A unique identifier representing your end-user, which can help OpenAI to [monitor and detect abuse](https://platform.openai.com/docs/api-reference/chat/create#chat-create-user).

- `stream`: If set, partial message deltas will be sent, like in ChatGPT. Otherwise, generation will only be available when completed.

- `messages`: [OpenAI format for messages](https://platform.openai.com/docs/api-reference/chat/create#chat-create-messages); the `name` field is added to messages with `system` and `assistant` roles when a custom name is specified via preset.
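
The default parameters documented here can be summarized in code. This is a hypothetical sketch (`buildChatParams`, `PresetOptions`, and `ChatRequestParams` are illustrative names, and `messages` is left out for brevity) of the post-change behavior described in this commit: the numeric defaults still apply, while `stop` is only sent when a conversation or preset supplies at least one sequence.

```typescript
// Hypothetical shapes for illustration; not LibreChat's actual types.
interface PresetOptions {
  model: string;
  top_p?: number;
  presence_penalty?: number;
  frequency_penalty?: number;
  stop?: string[];
}

interface ChatRequestParams {
  model: string;
  top_p: number;
  presence_penalty: number;
  frequency_penalty: number;
  user: string;
  stream: boolean;
  stop?: string[];
}

// Applies the documented defaults; `stop` is attached only when the
// conversation or preset provides at least one sequence.
function buildChatParams(preset: PresetOptions, userId: string): ChatRequestParams {
  const params: ChatRequestParams = {
    model: preset.model,
    top_p: preset.top_p ?? 1,
    presence_penalty: preset.presence_penalty ?? 0,
    frequency_penalty: preset.frequency_penalty ?? 0,
    user: userId,
    stream: true,
  };
  if (preset.stop && preset.stop.length > 0) {
    params.stop = [...preset.stop];
  }
  return params;
}

const withoutStop = buildChatParams({ model: "mistral" }, "LibreChat_User_ID");
console.log("stop" in withoutStop); // false

const withStop = buildChatParams({ model: "llama3:latest", stop: ["\nUser:"] }, "LibreChat_User_ID");
console.log(withStop.stop);
```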

@@ -1,6 +1,6 @@

{

"name": "librechat-data-provider",

"version": "0.5.7",

"version": "0.5.8",

"description": "data services for librechat apps",

"main": "dist/index.js",

"module": "dist/index.es.js",

@@ -114,6 +114,54 @@ describe('generateDynamicSchema', () => {

expect(result.success).toBeFalsy();

});

it('should generate a schema for array settings', () => {

const settings: SettingsConfiguration = [

{

key: 'testArray',

description: 'A test array setting',

type: 'array',

default: ['default', 'values'],

component: 'tags', // Assuming 'tags' imply an array of strings

optionType: OptionTypes.Custom,

columnSpan: 3,

label: 'Test Array Tags',

minTags: 1, // Minimum number of tags

maxTags: 5, // Maximum number of tags

},

];

const schema = generateDynamicSchema(settings);

// Testing with right number of tags

let result = schema.safeParse({ testArray: ['value1', 'value2', 'value3'] });

expect(result.success).toBeTruthy();

expect(result?.['data']).toEqual({ testArray: ['value1', 'value2', 'value3'] });

// Testing with too few tags (should fail)

result = schema.safeParse({ testArray: [] }); // Assuming minTags is 1, empty array should fail

expect(result.success).toBeFalsy();

if (!result.success) {

// Additional check to ensure the failure is because of the minTags condition

const issues = result.error.issues.filter(

(issue) => issue.path.includes('testArray') && issue.code === 'too_small',

);

expect(issues.length).toBeGreaterThan(0); // Ensure there is at least one issue related to 'testArray' being too small

}

// Testing with too many tags (should fail)

result = schema.safeParse({

testArray: ['value1', 'value2', 'value3', 'value4', 'value5', 'value6'],

}); // Assuming maxTags is 5, this should fail

expect(result.success).toBeFalsy();

if (!result.success) {

// Additional check to ensure the failure is because of the maxTags condition

const issues = result.error.issues.filter(

(issue) => issue.path.includes('testArray') && issue.code === 'too_big',

);

expect(issues.length).toBeGreaterThan(0); // Ensure there is at least one issue related to 'testArray' being too big

}

});

});

describe('validateSettingDefinitions', () => {

@@ -368,6 +416,71 @@ describe('validateSettingDefinitions', () => {

expect(settings[0].default).toBe(50); // Expects default to be midpoint of range

});

// Test for validating minTags and maxTags constraints

test('should validate minTags and maxTags constraints', () => {

const settingsWithTagsConstraints: SettingsConfiguration = [

{

key: 'selectedTags',

component: 'tags',

type: 'array',

default: ['tag1'], // Only one tag by default

minTags: 2, // Requires at least 2 tags, which should cause validation to fail

maxTags: 4,

optionType: OptionTypes.Custom,

},

];

expect(() => validateSettingDefinitions(settingsWithTagsConstraints)).toThrow(ZodError);

});

// Test for ensuring default values for tags are arrays

test('should ensure default values for tags are arrays', () => {

const settingsWithInvalidDefaultForTags: SettingsConfiguration = [

{

key: 'favoriteTags',

component: 'tags',

type: 'array',

default: 'notAnArray', // Incorrect default type

optionType: OptionTypes.Custom,

},

];

expect(() => validateSettingDefinitions(settingsWithInvalidDefaultForTags)).toThrow(ZodError);

});

// Test for array settings without default values should not throw if constraints are satisfied

test('array settings without defaults should not throw if constraints are met', () => {

const settingsWithNoDefaultButValidTags: SettingsConfiguration = [

{

key: 'userTags',

component: 'tags',

type: 'array',

minTags: 1, // Requires at least 1 tag

maxTags: 5, // Allows up to 5 tags

optionType: OptionTypes.Custom,

},

];

// No default is set, but since the constraints are potentially met (depends on user input), this should not throw

expect(() => validateSettingDefinitions(settingsWithNoDefaultButValidTags)).not.toThrow();

});

// Test for ensuring maxTags is respected in default array values

test('should ensure maxTags is respected for default array values', () => {

const settingsExceedingMaxTags: SettingsConfiguration = [

{

key: 'interestTags',

component: 'tags',

type: 'array',

default: ['music', 'movies', 'books', 'travel', 'cooking', 'sports'], // 6 tags

maxTags: 5, // Exceeds the maxTags limit

optionType: OptionTypes.Custom,

},

];

expect(() => validateSettingDefinitions(settingsExceedingMaxTags)).toThrow(ZodError);

});

});

const settingsConfiguration: SettingsConfiguration = [

@@ -616,7 +616,7 @@ export enum Constants {

/**

* Key for the Custom Config's version (librechat.yaml).

*/

CONFIG_VERSION = '1.0.6',

CONFIG_VERSION = '1.0.7',

/**

* Standard value for the first message's `parentMessageId` value, to indicate no parent exists.

*/

@@ -625,6 +625,10 @@ export enum Constants {

* Fixed, encoded domain length for Azure OpenAI Assistants Function name parsing.

*/

ENCODED_DOMAIN_LENGTH = 10,

/**

* Identifier for using current_model in multi-model requests.

*/

CURRENT_MODEL = 'current_model',

}

/**
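
The new `CURRENT_MODEL` constant backs the `titleModel: "current_model"` option described in the docs changes. A minimal sketch of the resolution rule, assuming the documented fallback to `gpt-3.5-turbo` when `titleModel` is omitted; `resolveTitleModel` is a hypothetical helper, not the function LibreChat actually uses.

```typescript
// Same value as `Constants.CURRENT_MODEL` in this diff.
const CURRENT_MODEL = "current_model";
// Documented fallback when `titleModel` is omitted.
const DEFAULT_TITLE_MODEL = "gpt-3.5-turbo";

// Hypothetical resolver: picks the model used for title generation.
function resolveTitleModel(titleModel: string | undefined, conversationModel: string): string {
  if (!titleModel) return DEFAULT_TITLE_MODEL;
  return titleModel === CURRENT_MODEL ? conversationModel : titleModel;
}

console.log(resolveTitleModel("current_model", "llama3:latest")); // llama3:latest
console.log(resolveTitleModel("mistral-tiny", "llama3:latest")); // mistral-tiny
console.log(resolveTitleModel(undefined, "llama3:latest")); // gpt-3.5-turbo
```

Reusing the conversation's own model is what lets a local Ollama setup avoid loading a second model just to write titles.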

@@ -1,12 +1,19 @@

import { z, ZodError, ZodIssueCode } from 'zod';

import { z, ZodArray, ZodError, ZodIssueCode } from 'zod';

import { tConversationSchema, googleSettings as google, openAISettings as openAI } from './schemas';

import type { ZodIssue } from 'zod';

import type { TConversation, TSetOption } from './schemas';

import type { TConversation, TSetOption, TPreset } from './schemas';

export type GoogleSettings = Partial<typeof google>;

export type OpenAISettings = Partial<typeof google>;

export type ComponentType = 'input' | 'textarea' | 'slider' | 'checkbox' | 'switch' | 'dropdown';

export type ComponentType =

| 'input'

| 'textarea'

| 'slider'

| 'checkbox'

| 'switch'

| 'dropdown'

| 'tags';

export type OptionType = 'conversation' | 'model' | 'custom';

@@ -17,6 +24,15 @@ export enum ComponentTypes {

Checkbox = 'checkbox',

Switch = 'switch',

Dropdown = 'dropdown',

Tags = 'tags',

}

export enum SettingTypes {

Number = 'number',

Boolean = 'boolean',

String = 'string',

Enum = 'enum',

Array = 'array',

}

export enum OptionTypes {

@@ -27,8 +43,8 @@ export enum OptionTypes {

export interface SettingDefinition {

key: string;

description?: string;

type: 'number' | 'boolean' | 'string' | 'enum';

default?: number | boolean | string;

type: 'number' | 'boolean' | 'string' | 'enum' | 'array';

default?: number | boolean | string | string[];

showDefault?: boolean;

options?: string[];

range?: SettingRange;

@@ -44,14 +60,18 @@ export interface SettingDefinition {

descriptionCode?: boolean;

minText?: number;

maxText?: number;

minTags?: number; // Specific to tags component

maxTags?: number; // Specific to tags component

includeInput?: boolean; // Specific to slider component

descriptionSide?: 'top' | 'right' | 'bottom' | 'left';

}

export type DynamicSettingProps = Partial<SettingDefinition> & {

readonly?: boolean;

settingKey: string;

setOption: TSetOption;

defaultValue?: number | boolean | string;

conversation: TConversation | TPreset | null;

defaultValue?: number | boolean | string | string[];

};

const requiredSettingFields = ['key', 'type', 'component'];

@@ -68,9 +88,19 @@ export function generateDynamicSchema(settings: SettingsConfiguration) {

const schemaFields: { [key: string]: z.ZodTypeAny } = {};

for (const setting of settings) {

const { key, type, default: defaultValue, range, options, minText, maxText } = setting;

const {

key,

type,

default: defaultValue,

range,

options,

minText,

maxText,

minTags,

maxTags,

} = setting;

if (type === 'number') {

if (type === SettingTypes.Number) {

let schema = z.number();

if (range) {

schema = schema.min(range.min);

@@ -84,7 +114,7 @@ export function generateDynamicSchema(settings: SettingsConfiguration) {

continue;

}

if (type === 'boolean') {

if (type === SettingTypes.Boolean) {

const schema = z.boolean();

if (typeof defaultValue === 'boolean') {

schemaFields[key] = schema.default(defaultValue);

@@ -94,7 +124,7 @@ export function generateDynamicSchema(settings: SettingsConfiguration) {

continue;

}

if (type === 'string') {

if (type === SettingTypes.String) {

let schema = z.string();

if (minText) {

schema = schema.min(minText);

@@ -110,7 +140,7 @@ export function generateDynamicSchema(settings: SettingsConfiguration) {

continue;

}

if (type === 'enum') {

if (type === SettingTypes.Enum) {

if (!options || options.length === 0) {

console.warn(`Missing or empty 'options' for enum setting '${key}'.`);

continue;

@@ -125,6 +155,23 @@ export function generateDynamicSchema(settings: SettingsConfiguration) {

continue;

}

if (type === SettingTypes.Array) {

let schema: z.ZodSchema = z.array(z.string().or(z.number()));

if (minTags && schema instanceof ZodArray) {

schema = schema.min(minTags);

}

if (maxTags && schema instanceof ZodArray) {

schema = schema.max(maxTags);

}

if (defaultValue && Array.isArray(defaultValue)) {

schema = schema.default(defaultValue);

}

schemaFields[key] = schema;

continue;

}

console.warn(`Unsupported setting type: ${type}`);

}
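
For readers less familiar with zod, the array branch added to `generateDynamicSchema` roughly amounts to the checks below. This sketch deliberately swaps zod for hand-written logic; `parseTags`, `ArraySettingSketch`, and the result shape are hypothetical, though the `too_small` and `too_big` codes match the zod issue codes asserted in this commit's tests.

```typescript
// Hypothetical types; values may be strings or numbers, as in
// `z.array(z.string().or(z.number()))`.
type TagValue = string | number;

interface ArraySettingSketch {
  minTags?: number;
  maxTags?: number;
  default?: TagValue[];
}

type TagParseResult =
  | { success: true; data: TagValue[] }
  | { success: false; code: "invalid_type" | "too_small" | "too_big" };

function parseTags(setting: ArraySettingSketch, input: unknown): TagParseResult {
  // Like `.default(...)`: an undefined input is replaced by the default array,
  // which is then validated like any other value.
  const value: unknown = input === undefined ? setting.default : input;
  if (!Array.isArray(value) || !value.every((v) => typeof v === "string" || typeof v === "number")) {
    return { success: false, code: "invalid_type" };
  }
  // Truthiness checks mirror `if (minTags && ...)`, so a limit of 0 is ignored.
  if (setting.minTags && value.length < setting.minTags) {
    return { success: false, code: "too_small" };
  }
  if (setting.maxTags && value.length > setting.maxTags) {
    return { success: false, code: "too_big" };
  }
  return { success: true, data: value as TagValue[] };
}

const testArray: ArraySettingSketch = { minTags: 1, maxTags: 5, default: ["default", "values"] };
console.log(parseTags(testArray, []).success); // false
console.log(parseTags(testArray, undefined)); // { success: true, data: [ 'default', 'values' ] }
```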

@@ -178,17 +225,75 @@ export function validateSettingDefinitions(settings: SettingsConfiguration): voi

}

// check accepted types

if (!['number', 'boolean', 'string', 'enum'].includes(setting.type)) {

const settingTypes = Object.values(SettingTypes);

if (!settingTypes.includes(setting.type as SettingTypes)) {

errors.push({

code: ZodIssueCode.custom,

message: `Invalid type for setting ${setting.key}. Must be one of 'number', 'boolean', 'string', 'enum'.`,

message: `Invalid type for setting ${setting.key}. Must be one of ${settingTypes.join(

', ',

)}.`,

path: ['type'],

});

}

// Predefined constraints based on components

if (setting.component === 'input' || setting.component === 'textarea') {

if (setting.type === 'number' && setting.component === 'textarea') {

if (

(setting.component === ComponentTypes.Tags && setting.type !== SettingTypes.Array) ||

(setting.component !== ComponentTypes.Tags && setting.type === SettingTypes.Array)

) {

errors.push({

code: ZodIssueCode.custom,

message: `Tags component for setting ${setting.key} must have type array.`,

path: ['type'],

});

}

if (setting.component === ComponentTypes.Tags) {

if (setting.minTags !== undefined && setting.minTags < 0) {

errors.push({

code: ZodIssueCode.custom,

message: `Invalid minTags value for setting ${setting.key}. Must be non-negative.`,

path: ['minTags'],

});

}

if (setting.maxTags !== undefined && setting.maxTags < 0) {

errors.push({

code: ZodIssueCode.custom,

message: `Invalid maxTags value for setting ${setting.key}. Must be non-negative.`,

path: ['maxTags'],

});

}

if (setting.default && !Array.isArray(setting.default)) {

errors.push({

code: ZodIssueCode.custom,

message: `Invalid default value for setting ${setting.key}. Must be an array.`,

path: ['default'],

});

}

if (setting.default && setting.maxTags && (setting.default as []).length > setting.maxTags) {

errors.push({

code: ZodIssueCode.custom,

message: `Invalid default value for setting ${setting.key}. Must have at most ${setting.maxTags} tags.`,

path: ['default'],

});

}

if (setting.default && setting.minTags && (setting.default as []).length < setting.minTags) {

errors.push({

code: ZodIssueCode.custom,

message: `Invalid default value for setting ${setting.key}. Must have at least ${setting.minTags} tags.`,

path: ['default'],

});

}

if (!setting.default) {

setting.default = [];

}

}

if (

setting.component === ComponentTypes.Input ||

setting.component === ComponentTypes.Textarea

) {

if (setting.type === SettingTypes.Number && setting.component === ComponentTypes.Textarea) {

errors.push({

code: ZodIssueCode.custom,

message: `Textarea component for setting ${setting.key} must have type string.`,
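
The new tag constraints in `validateSettingDefinitions` can be sketched without zod as follows. This is a simplified, hypothetical version (`checkTagSetting` and `TagSettingSketch` are illustrative names, and a plain object stands in for the `SettingTypes` enum): it returns error messages instead of pushing `ZodIssue`s and throwing, and it only compares lengths when the default really is an array.

```typescript
// Stand-in for the `SettingTypes` enum from this diff (same values).
const SettingTypes = {
  Number: "number",
  Boolean: "boolean",
  String: "string",
  Enum: "enum",
  Array: "array",
} as const;

// Hypothetical, trimmed-down shape of a setting definition.
interface TagSettingSketch {
  key: string;
  type: string;
  component: string;
  default?: unknown;
  minTags?: number;
  maxTags?: number;
}

// Returns a list of problems; an empty list means the definition passes.
function checkTagSetting(setting: TagSettingSketch): string[] {
  const errors: string[] = [];
  const settingTypes: string[] = Object.values(SettingTypes);
  if (!settingTypes.includes(setting.type)) {
    errors.push(`Invalid type for setting ${setting.key}. Must be one of ${settingTypes.join(", ")}.`);
  }
  // The tags component and the array type must always be used together.
  const isTags = setting.component === "tags";
  if (isTags !== (setting.type === SettingTypes.Array)) {
    errors.push(`Tags component for setting ${setting.key} must have type array.`);
  }
  if (!isTags) return errors;

  if (setting.minTags !== undefined && setting.minTags < 0) {
    errors.push(`Invalid minTags value for setting ${setting.key}.`);
  }
  if (setting.maxTags !== undefined && setting.maxTags < 0) {
    errors.push(`Invalid maxTags value for setting ${setting.key}.`);
  }
  const def = setting.default;
  if (def && !Array.isArray(def)) {
    errors.push(`Default for setting ${setting.key} must be an array.`);
  } else if (Array.isArray(def)) {
    if (setting.maxTags && def.length > setting.maxTags) {
      errors.push(`Default for setting ${setting.key} exceeds ${setting.maxTags} tags.`);
    }
    if (setting.minTags && def.length < setting.minTags) {
      errors.push(`Default for setting ${setting.key} has fewer than ${setting.minTags} tags.`);
    }
  }
  // As in the diff, a missing default is normalized to an empty array.
  if (!setting.default) setting.default = [];
  return errors;
}

const userTags: TagSettingSketch = { key: "userTags", type: "array", component: "tags", minTags: 1, maxTags: 5 };
console.log(checkTagSetting(userTags), userTags.default); // [] []
```

The cases mirror the four new `validateSettingDefinitions` tests above: a too-short default, a non-array default, and an oversized default all produce errors, while a setting with no default passes and gets `[]`.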

@@ -214,8 +319,8 @@ export function validateSettingDefinitions(settings: SettingsConfiguration): voi

} // Default placeholder

}

if (setting.component === 'slider') {

if (setting.type === 'number' && !setting.range) {

if (setting.component === ComponentTypes.Slider) {

if (setting.type === SettingTypes.Number && !setting.range) {

errors.push({

code: ZodIssueCode.custom,

message: `Slider component for setting ${setting.key} must have a range if type is number.`,

@@ -224,7 +329,7 @@ export function validateSettingDefinitions(settings: SettingsConfiguration): voi

// continue;

}

if (

setting.type === 'enum' &&

setting.type === SettingTypes.Enum &&

(!setting.options || setting.options.length < minSliderOptions)