mirror of

https://github.com/danny-avila/LibreChat.git

synced 2026-01-22 18:26:12 +01:00

✋ feat: Stop Sequences for Conversations & Presets (#2536)

* feat: `stop` conversation parameter
* feat: Tag primitive
* feat: dynamic tags
* refactor: update tag styling
* feat: add stop sequences to OpenAI settings
* fix(Presentation): prevent `SidePanel` re-renders that flicker side panel
* refactor: use stop placeholder
* feat: type and schema update for `stop` and `TPreset` in generation param related types
* refactor: pass conversation to dynamic settings
* refactor(OpenAIClient): remove default handling for `modelOptions.stop`
* docs: fix Google AI Setup formatting
* feat: current_model
* docs: WIP update
* fix(ChatRoute): prevent default preset override before `hasSetConversation.current` becomes true by including latest conversation state as template
* docs: update docs with more info on `stop`
* chore: bump config_version
* refactor: CURRENT_MODEL handling


parent 4121818124

commit 099aa9dead

29 changed files with 690 additions and 93 deletions

@@ -260,8 +260,9 @@ Some of the endpoints are marked as **Known,** which means they might have speci
- API is strict with unrecognized parameters and errors are not descriptive (usually "no body")
- The use of [`dropParams`](./custom_config.md#dropparams) to drop "stop", "user", "frequency_penalty", "presence_penalty" params is required.
- The use of [`dropParams`](./custom_config.md#dropparams) to drop "user", "frequency_penalty", "presence_penalty" params is required.
- `stop` is no longer included as a default parameter, so there is no longer a need to include it in [`dropParams`](./custom_config.md#dropparams), unless you would like to completely prevent users from configuring this field.
- Allows fetching the models list, but be careful not to use embedding models for chat.
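A minimal `dropParams` sketch for the notes above, assuming a custom endpoint entry in `librechat.yaml`; the endpoint name, environment variable, URL, and model below are placeholders rather than values from this commit. Adding `"stop"` to the list is optional now that it is no longer sent by default, and only makes sense if you want to keep users from setting stop sequences on this endpoint.

```yaml
endpoints:
  custom:
    - name: "ExampleEndpoint"                # placeholder name
      apiKey: "${EXAMPLE_API_KEY}"           # placeholder environment variable
      baseURL: "https://api.example.com/v1"  # placeholder URL
      models:
        default: ["example-model"]           # placeholder model
      # Parameters removed from every request sent to this endpoint.
      # Append "stop" only to block user-configured stop sequences.
      dropParams: ["user", "frequency_penalty", "presence_penalty"]
```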

```yaml

@@ -289,6 +290,8 @@ Some of the endpoints are marked as **Known,** which means they might have speci
- **Known:** icon provided.
- **Known issue:** fetching list of models is not supported. See [Pull Request 2728](https://github.com/ollama/ollama/pull/2728).
- Download models with the `ollama run` command. See [Ollama Library](https://ollama.com/library)
- It's recommended to use the value "current_model" for the `titleModel` to avoid loading more than 1 model per conversation.
- Doing so will dynamically use the current conversation model for the title generation.
- For your convenience, the example includes a list of the top 5 popular models from the Ollama Library, last updated on March 1, 2024.
```yaml

@@ -306,16 +309,18 @@ Some of the endpoints are marked as **Known,** which means they might have speci
]
fetch: false # fetching list of models is not supported
titleConvo: true
titleModel: "llama2"
titleModel: "current_model"
summarize: false
summaryModel: "llama2"
summaryModel: "current_model"
forcePrompt: false
modelDisplayLabel: "Ollama"
```
!!! tip "Ollama -> llama3"
To prevent the behavior where llama3 does not stop generating, add this `addParams` block to the config:
Note: Now that `stop` has been removed from the [default parameters](./custom_config.md#default-parameters), the issue highlighted below should no longer occur.
However, in case you experience the behavior where `llama3` does not stop generating, add this `addParams` block to the config:
```yaml
- name: "Ollama"

@@ -327,9 +332,9 @@ Some of the endpoints are marked as **Known,** which means they might have speci
]
fetch: false # fetching list of models is not supported
titleConvo: true
titleModel: "llama3"
titleModel: "current_model"
summarize: false
summaryModel: "llama3"
summaryModel: "current_model"
forcePrompt: false
modelDisplayLabel: "Ollama"
addParams:

@ -341,6 +346,31 @@ Some of the endpoints are marked as **Known,** which means they might have speci

|

|||

]

|

||||

```

|

||||

|

||||

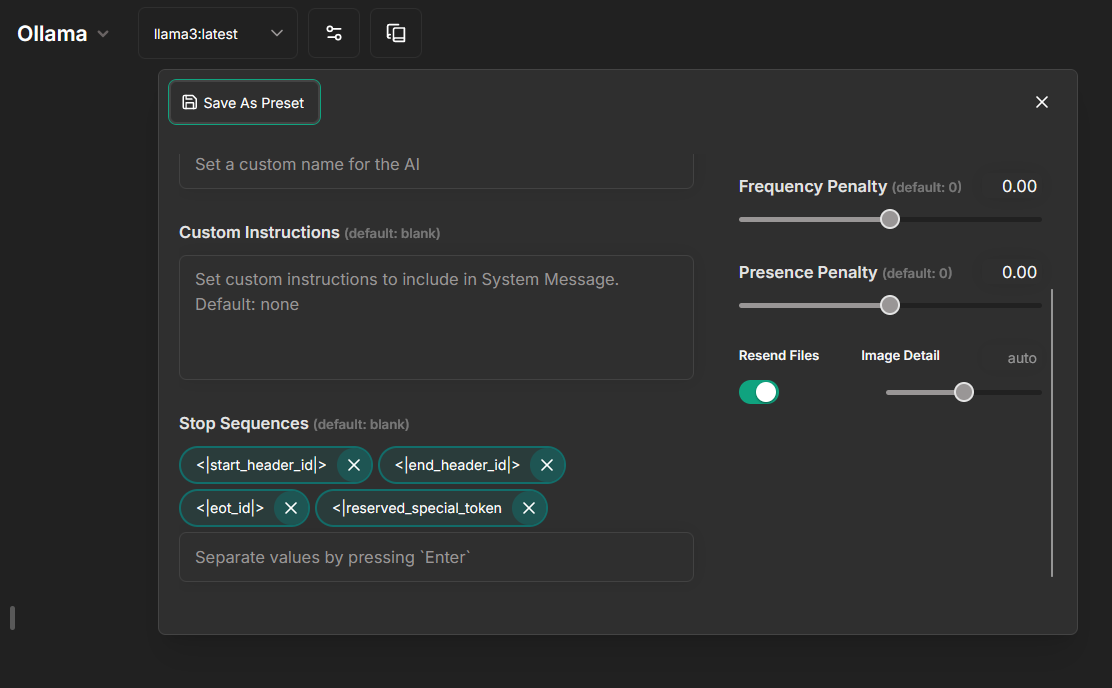

If you are only using `llama3` with **Ollama**, it's fine to set the `stop` parameter at the config level via `addParams`.
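A sketch of that config-level approach, assuming `<|eot_id|>` (Llama 3's end-of-turn token) is the sequence you want generation to stop on. The `addParams` block from the original example is cut off in this diff, so this is not its exact content:

```yaml
- name: "Ollama"
  apiKey: "ollama"
  baseURL: "http://host.docker.internal:11434/v1/"
  models:
    default: ["llama3:latest"]
    fetch: false # fetching list of models is not supported
  titleConvo: true
  titleModel: "current_model"
  # Sent with every request to this endpoint, whichever model is selected,
  # which is why this only suits a single-model (llama3) setup.
  addParams:
    stop: ["<|eot_id|>"] # assumed Llama 3 end-of-turn token
```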

However, if you are using multiple models, it's now recommended to add stop sequences from the frontend via conversation parameters and presets.
For example, we can omit `addParams`:
```yaml
- name: "Ollama"
apiKey: "ollama"
baseURL: "http://host.docker.internal:11434/v1/"
models:
default: [
"llama3:latest",
"mistral"
]
fetch: false # fetching list of models is not supported
titleConvo: true
titleModel: "current_model"
modelDisplayLabel: "Ollama"
```
And use these settings (it's best to also save them as a preset):
## Openrouter
> OpenRouter API key: [openrouter.ai/keys](https://openrouter.ai/keys)

@@ -348,7 +378,7 @@ Some of the endpoints are marked as **Known,** which means they might have speci
- **Known:** icon provided; fetching the list of models is recommended, as API token rates and pricing are used for token credit balances when models are fetched.
- It's recommended, and for some models required, to use [`dropParams`](./custom_config.md#dropparams) to drop the `stop` parameter, as OpenRouter models use a variety of stop tokens.
- `stop` is no longer included as a default parameter, so there is no longer a need to include it in [`dropParams`](./custom_config.md#dropparams), unless you would like to completely prevent users from configuring this field.
- **Known issue:** you should not use `OPENROUTER_API_KEY` as it will then override the `openAI` endpoint to use OpenRouter as well.
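If you do want to keep users from configuring stop sequences on OpenRouter, a sketch of the endpoint entry, assuming an environment variable named `OPENROUTER_KEY` (any name other than `OPENROUTER_API_KEY` avoids the override described above) and a placeholder default model:

```yaml
- name: "OpenRouter"
  apiKey: "${OPENROUTER_KEY}" # assumed variable name; not OPENROUTER_API_KEY
  baseURL: "https://openrouter.ai/api/v1"
  models:
    default: ["example/model-id"] # placeholder model ID
    fetch: true # recommended so token rates and pricing are available
  # Strip `stop` from every request, so stop sequences set by users are never sent.
  dropParams: ["stop"]
  modelDisplayLabel: "OpenRouter"
```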

@@ -159,7 +159,8 @@ For your convenience, these are the latest models as of 4/15/24 that can be used
GOOGLE_MODELS=gemini-1.0-pro,gemini-1.0-pro-001,gemini-1.0-pro-latest,gemini-1.0-pro-vision-latest,gemini-1.5-pro-latest,gemini-pro,gemini-pro-vision
```
Notes:
**Notes:**
- A gemini-pro model or `gemini-pro-vision` is required in your list for attaching images.
- Using LibreChat, PaLM2 and Codey models can only be accessed through Vertex AI, not the Generative Language API.
- Only models that support the `generateContent` method can be used natively with LibreChat + the Gen AI API.

@@ -92,7 +92,7 @@ To properly integrate Azure OpenAI with LibreChat, specific fields must be accur
These settings apply globally to all Azure models and groups within the endpoint. Here are the available fields:
1. **titleModel** (String, Optional): Specifies the model to use for generating conversation titles. If not provided, the default model is set as `gpt-3.5-turbo`, which will result in no titles if lacking this model.
1. **titleModel** (String, Optional): Specifies the model to use for generating conversation titles. If not provided, the default model is set as `gpt-3.5-turbo`, which will result in no titles if lacking this model. You can also set this to dynamically use the current model by setting it to `current_model`.
2. **plugins** (Boolean, Optional): Enables the use of plugins through Azure. Set to `true` to activate Plugins endpoint support through your Azure config. Default: `false`.

@@ -398,8 +398,11 @@ endpoints:
titleModel: "gpt-3.5-turbo"
```
**Note**: "gpt-3.5-turbo" is the default value, so you can omit it if you want to use this exact model and have it configured. If not configured and `titleConvo` is set to `true`, the titling process will result in an error and no title will be generated.
**Note**: "gpt-3.5-turbo" is the default value, so you can omit it if you want to use this exact model and have it configured. If not configured and `titleConvo` is set to `true`, the titling process will result in an error and no title will be generated. You can also set this to dynamically use the current model by setting it to `current_model`.
```yaml
titleModel: "current_model"
```
### Using GPT-4 Vision with Azure

@@ -24,7 +24,9 @@ Stay tuned for ongoing enhancements to customize your LibreChat instance!
## Compatible Endpoints
Any API designed to be compatible with OpenAI's should be supported, but here is a list of **[known compatible endpoints](./ai_endpoints.md) including example setups.**
Any API designed to be compatible with OpenAI's should be supported.
Here is a list of **[known compatible endpoints](./ai_endpoints.md) including example setups.**
## Setup

@@ -665,6 +667,7 @@ endpoints:
- Type: String
- Example: `titleModel: "mistral-tiny"`
- **Note**: Defaults to "gpt-3.5-turbo" if omitted. May cause issues if "gpt-3.5-turbo" is not available.
- **Note**: You can also dynamically use the current conversation model by setting it to "current_model".
### **summarize**:

@@ -752,11 +755,6 @@ Custom endpoints share logic with the OpenAI endpoint, and thus have default par
"top_p": 1,
"presence_penalty": 0,
"frequency_penalty": 0,
"stop": [
"||>",
"\nUser:",
"<|diff_marker|>",
],
"user": "LibreChat_User_ID",
"stream": true,
"messages": [

@@ -773,7 +771,6 @@ Custom endpoints share logic with the OpenAI endpoint, and thus have default par
- `top_p`: Defaults to `1` if not provided via preset,
- `presence_penalty`: Defaults to `0` if not provided via preset,
- `frequency_penalty`: Defaults to `0` if not provided via preset,
- `stop`: Sequences where the AI will stop generating further tokens. By default, uses the start token (`||>`), the user label (`\nUser:`), and end token (`<|diff_marker|>`). Up to 4 sequences can be provided to the [OpenAI API](https://platform.openai.com/docs/api-reference/chat/create#chat-create-stop)
- `user`: A unique identifier representing your end-user, which can help OpenAI to [monitor and detect abuse](https://platform.openai.com/docs/api-reference/chat/create#chat-create-user).
- `stream`: If set, partial message deltas will be sent, like in ChatGPT. Otherwise, generation will only be available when completed.
- `messages`: [OpenAI format for messages](https://platform.openai.com/docs/api-reference/chat/create#chat-create-messages); the `name` field is added to messages with `system` and `assistant` roles when a custom name is specified via preset.
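Since this commit removes `stop` from the default parameters listed above, an endpoint that relied on the old behavior can send those sequences explicitly. A sketch using `addParams` on a custom endpoint entry (the name, environment variable, URL, and model are placeholders), staying within the OpenAI API's limit of 4 stop sequences:

```yaml
- name: "ExampleEndpoint"               # placeholder name
  apiKey: "${EXAMPLE_API_KEY}"          # placeholder environment variable
  baseURL: "https://api.example.com/v1" # placeholder URL
  models:
    default: ["example-model"]          # placeholder model
  # Re-adds the former default stop sequences to every request.
  # Inside double quotes, "\n" is a YAML newline escape, matching the JSON "\nUser:".
  addParams:
    stop: ["||>", "\nUser:", "<|diff_marker|>"]
```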
